User Tutorial


The OpenMS Developers

July 19, 2021

Creative Commons Attribution 4.0 International (CC BY 4.0)

Before we get started, we will install OpenMS and KNIME. If you take part in a training session, you have likely received a USB stick from us that contains the required data and software. If we provide laptops with the software, you may of course skip the installation process and continue with the next section.

Please choose the directory that matches your operating system and execute the installer.

For example for Windows you call

on macOS you call

and follow the instructions. For the OpenMS installation on macOS, you need to accept the license and drag and drop the OpenMS folder into your Applications folder.

Note: Due to increasing security measures for downloaded apps (e.g. path randomization) on macOS, you might need to open TOPPView.app and TOPPAS.app while holding and accept the warning. If the app still does not open, you might need to move them from to e.g. your Desktop and back.

On Linux, you can extract KNIME to a folder of your choice. For TOPPView, you need to install OpenMS via your package manager or build it yourself following the instructions at www.openms.de/documentation.

Note: If you have installed OpenMS on Linux or macOS via your package manager (for instance by installing the OpenMS-2.6.0-Linux.deb package), then you need to set the OPENMS_DATA_PATH variable to the directory containing the shared data (normally /usr/share/OpenMS). This must be done prior to running any TOPP tool.

If you are working through this tutorial at home, you can get the installers from the following links:

Choose the installers for the platform you are working on.

Each MS instrument vendor has one or more formats for storing the acquired data. Converting these data into an open format (preferably mzML) is the very first step when you want to work with open-source mass spectrometry software. A freely available conversion tool is MSConvert, which is part of a ProteoWizard installation. All files used in this tutorial have already been converted to mzML by us, so you do not need to perform the data conversion yourself. However, we provide a small raw file so you can try the important step of raw data conversion for yourself.

Note: The OpenMS installation package for Windows automatically installs ProteoWizard, so you do not need to download and install it separately. Due to restrictions from the instrument vendors, file format conversion for most formats is only possible on Windows systems. In practice, performing the conversion to mzML on the acquisition PC connected to the instrument is usually the most convenient option.

To convert raw data to mzML using ProteoWizard, you can either use MSConvertGUI (a graphical user interface) or msconvert (a simple command line tool). Both tools are available in: . You can find a small RAW file on the USB stick .

MSConvertGUI (see Fig. 1) exposes the main parameters for data conversion in a convenient graphical user interface.

The msconvert command line tool has no user interface but offers more options than the MSConvertGUI application. Additionally, since it can be used within a batch script, it allows converting large numbers of files and can be automated much more easily.

To convert and pick the file raw_data_file.RAW you may write:

in your command line.

To convert all RAW files in a folder, you may write:

Note: To display all options you may type .

Additional information is available on the ProteoWizard web page.

Recently, the open-source, platform-independent ThermoRawFileParser tool has been developed. While ProteoWizard and MSConvert are only available for Windows systems, this new tool also allows converting Thermo raw data on macOS or Linux.

Note: To learn more about the ThermoRawFileParser and how to use it in KNIME, see Section 2.4.7.

Visualizing the data is the first step in quality control, an essential tool in understanding the data, and of course an essential step in pipeline development. OpenMS provides a convenient viewer for some of the data: TOPPView.

We will guide you through some of the basic features of TOPPView. Please familiarize yourself with the key controls and visualization methods. We will make use of these later throughout the tutorial. Let’s start with a first look at one of the files of our tutorial data set. Note that conceptually, there are no differences in visualizing metabolomic or proteomic data. Here, we inspect a simple proteomic measurement:

Note: On macOS, due to a bug in one of the external libraries used by OpenMS, you will see a small window of the 3D mode when switching to 2D. Close the 3D tab in order to get rid of it.

Depending on your data, MS/MS spectra can be visualized as well (see Fig. 5). You can do so by double-clicking on the MS/MS spectrum shown in the scan view.

Using OpenMS in combination with KNIME, you can create, edit, open, save, and run workflows that combine TOPP tools with the powerful data analysis capabilities of KNIME.

Workflows can be created conveniently in a graphical user interface. The parameters of all involved tools can be edited within the application and are also saved as part of the workflow. Furthermore, KNIME interactively performs validity checks during the workflow editing process, which makes it more difficult to create an invalid workflow.

Throughout most parts of this tutorial you will use KNIME to create and execute workflows. The first step is to make yourself familiar with KNIME. Additional information on basic usage of KNIME can be found on the KNIME Getting Started page. However, the most important concepts will also be reviewed in this tutorial.

Before we can start with the tutorial, we need to install all the required extensions for KNIME. Since KNIME 3.2.1, the program automatically detects missing plugins when you open a workflow. However, to make sure that the right source for the OpenMS plugin is chosen, please follow the instructions here. First, we install some additional extensions that are required by our OpenMS nodes or used in the tutorials, e.g. for visualization and file handling.

In addition, we need to install R for the statistical downstream analysis. Choose the directory that matches your operating system, double-click the R installer and follow the instructions. We recommend using the default settings whenever possible. On macOS, you also need to install XQuartz from the same directory.

Afterwards, open your R installation. If you use Windows, you will find an “R x64 3.6.X” icon on your desktop. If you use macOS, you will find R in your Applications folder. In R, type the following lines (you might also copy them from the file folder on the USB stick):

install.packages("Rserve", repos = "http://rforge.net/", type = "source")
install.packages("Cairo")
install.packages("devtools")
install.packages("ggplot2")
install.packages("ggfortify")
if (!requireNamespace("BiocManager", quietly = TRUE))
    install.packages("BiocManager")
BiocManager::install()
BiocManager::install(c("MSstats"))

In KNIME, click on , select the category and set the “Path to R Home” to your installation path. If you installed R as described above, you can use the following settings:

You are now ready to install the OpenMS nodes.

We included a custom KNIME update site to install the OpenMS KNIME plugins from the USB stick. If you do not have a stick available, please see below.

Alternatively, you can try the following steps, which install the OpenMS KNIME plugins from the internet. Note that the download can be slow.

A workflow is a sequence of computational steps applied to one or more input datasets to process and analyze the data. In KNIME, such workflows are implemented graphically by connecting so-called nodes. A node represents a single analysis step in a workflow. Nodes have input ports, where the data enters the node, and output ports, where the results are provided to other nodes after processing. KNIME distinguishes between different port types, representing different types of data. The most common representation of data in KNIME is a table (similar to an Excel sheet). Ports that accept tables are marked with a small triangle. For OpenMS nodes, we use a different port type, so-called file ports, representing complete files. Those ports are marked by a small blue box. Filled blue boxes represent mandatory inputs and empty blue boxes optional inputs. The same holds for output ports, except that you can deactivate them in the configuration dialog (double-click on the node) under the OutputTypes tab. After execution, deactivated ports will be marked with a red cross and downstream nodes will be inactive (not configurable).

A typical OpenMS workflow in KNIME can be divided into two conceptually different parts:

Moreover, nodes can have three different states, indicated by the small traffic light below the node.

If the node execution fails, the node will switch to the red state. Other anomalies and warnings, like missing information or empty results, will be presented with a yellow exclamation mark above the traffic light. Most nodes will be configured as soon as all input ports are connected. Some nodes need to know about the output of their predecessor and may stay red until the predecessor has been executed. If nodes still remain in a red state, additional parameters probably have to be provided in the configuration dialog that can neither be guessed from the data nor filled with sensible defaults. In this case, or if you want to customize the default configuration in general, you can open the configuration dialog of a node with a double-click on the node. For all OpenMS nodes you will see a configuration dialog like the one shown in Figure 6.

Note: OpenMS distinguishes between normal parameters and advanced parameters. Advanced parameters are hidden from the user by default since they should only rarely be customized. In case you want to have a look at the parameters or need to customize them in one of the tutorials, you can show them by clicking on the checkbox in the lower part of the dialog. Afterwards, the advanced parameters are shown in light gray.

The dialog shows the individual parameters, their current value and type, and, in the lower part of the dialog, the documentation for the currently selected parameter. Please also note the tabs on the top of the configuration dialog. In the case of OpenMS nodes, there will be another tab called OutputTypes. It contains dropdown menus for every output port that let you select the output filetype that you want the node to return (if the tool supports it). For optional output ports you can select Inactive such that the port is crossed out after execution and the associated generation of the file and possible additional computations are not performed. Note that this will deactivate potential downstream nodes connected to this port.

The graphical user interface (GUI) of KNIME consists of different components or so-called panels that are shown in Figure 7. We will briefly introduce the individual panels and their purposes below.

Workflows can easily be created by a right click in the Workflow Explorer followed by clicking on .

To be able to share a workflow with others, KNIME supports the import and export of complete workflows. To export a workflow, select it in the Workflow Explorer and select .

KNIME will export workflows as a knwf file containing all the information on nodes, their connections, and their parameter configuration. Those knwf files can again be imported by selecting .

Note: For your convenience, we added all workflows discussed in this tutorial to the folder on the USB stick. Additionally, the workflow files can be found on our GitHub repository. If you want to check your own workflow by comparing it to the solution, or if you got stuck, simply import the full workflow from the corresponding knwf file and then double-click it in your KNIME Workflow repository to open it.

In this tutorial, a lot of the workflows will be created based on the workflow from a previous task. To keep the intermediate workflows, we suggest you create copies of your workflows so you can see the progress. To create a copy of your workflow, save it, close it and follow the next steps.

Note: To rename a workflow it has to be closed, too.

Let us now start with the creation of our very first, very simple workflow. As a first step, we will gather some basic information about the data set before starting the actual development of a data analysis workflow. This minimal workflow can also be used to check if all requirements are met and that your system is compatible.

Note: In case you are unsure about which node port to use, hovering the cursor over the port in question will display the port name and what kind of input it expects.

The complete workflow is shown in Figure 8. FileInfo can produce two different kinds of output files.

Note: Make sure to use the “tiny” version this time, not “small”, for the sake of faster workflow execution.

Workflows are typically constructed to process a large number of files automatically. As a simple example, suppose you would like to convert multiple Thermo Raw files into the mzML format. We will now modify the workflow to compute the same information on three different files and then write the output files to a folder.

If you had trouble understanding what ZipLoopStart and ZipLoopEnd do, here is a brief explanation:

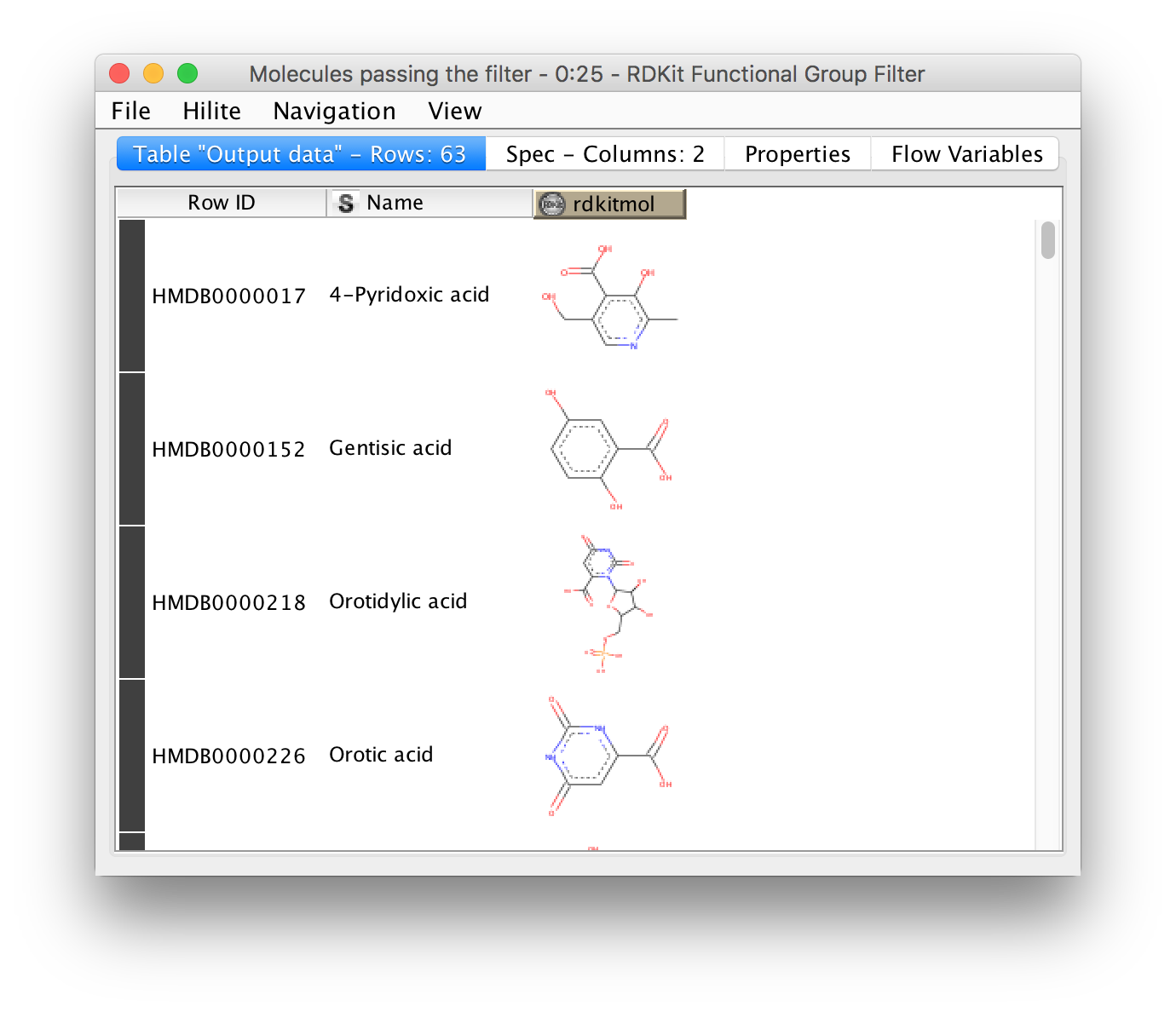
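For readers who think in code, the loop semantics can be mimicked in a few lines of base R. This is only an analogy under stated assumptions: ZipLoopStart is assumed to hand out the i-th element of each equally long input list per iteration, and ZipLoopEnd is assumed to collect the per-iteration results into one list. The function `run_zip_loop` and the file names are hypothetical and are not part of KNIME or OpenMS.

```r
# Hypothetical analogy (not KNIME code): ZipLoopStart/ZipLoopEnd in base R.
run_zip_loop <- function(body, ...) {
  inputs <- list(...)
  # assumption: all input lists must have the same length
  if (length(unique(lengths(inputs))) != 1) {
    stop("all input lists must have the same length")
  }
  # mapply walks through all lists in lockstep; each call of 'body' is one
  # loop iteration, and the returned list plays the role of ZipLoopEnd
  mapply(body, ..., SIMPLIFY = FALSE, USE.NAMES = FALSE)
}

spectra <- c("run1.mzML", "run2.mzML", "run3.mzML")
ids     <- c("run1.idXML", "run2.idXML", "run3.idXML")

# loop body: pair each spectrum file with its identification file
results <- run_zip_loop(function(s, i) paste(s, "+", i), spectra, ids)
print(unlist(results))
```

Nodes placed between ZipLoopStart and ZipLoopEnd correspond to the body of the function. They see only one set of files at a time.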

Metabolomics analyses often involve working with chemical structures. Popular cheminformatics toolkits such as RDKit [7] or CDK [8] are available as KNIME plugins and allow us to work with chemical structures directly from within KNIME. In particular, we will use KNIME and RDKit to visualize a list of compounds and filter them by predefined substructures. Chemical structures are often represented as SMILES (Simplified Molecular Input Line Entry System), a simple and compact way to describe complex chemical structures as text. For example, the chemical structure of L-alanine can be written as the SMILES string C[C@H](N)C(O)=O. As we will discuss later, all OpenMS tools that perform metabolite identification report SMILES as part of their results, which can then be further processed and visualized using RDKit and KNIME.

Perform the following steps to build the workflow shown in Fig. 10. You will use this workflow to visualize a list of SMILES strings and filter them by predefined substructures:

Workflows can get rather complex and may contain dozens or even hundreds of nodes. KNIME provides a simple way to improve handling and clarity of large workflows:

Metanodes allow you to bundle several nodes into a single Metanode.

Task

Select multiple nodes (e.g. all nodes of the ZipLoop including the start and end node). To select a set of nodes, draw a rectangle around them with the left mouse button or hold to add/remove single nodes from the selection. Pro-tip: There is a option when you right-click a node in a loop that does exactly that for you. Then, open the context menu (right-click on a node in the selection) and select .

Enter a caption for the Metanode. The previously selected nodes are now contained in the Metanode. Double-clicking on the Metanode will display the contained nodes in a new tab window.

Task

Wrap the Metanode to let it behave like an encapsulated single node. First select the Metanode, open the context menu (right-click) and select . The differences between Metanodes and their wrapped counterparts are marginal (and only apply when exposing user inputs and workflow variables). Therefore, we suggest using standard Metanodes to clean up your workflow and cluster common subparts until you actually notice their limits.

Task

Undo the packaging. First select the (Wrapped) Metanode, open the context menu (right-click) and select .

KNIME provides a large number of nodes for a wide range of statistical analysis, machine learning, data processing, and visualization. Still, more recent statistical analysis methods, specialized visualizations or cutting edge algorithms may not be covered in KNIME. In order to expand its capabilities beyond the readily available nodes, external scripting languages can be integrated. In this tutorial, we primarily use scripts of the powerful statistical computing language R. Note that this part is considered advanced and might be difficult to follow if you are not familiar with R. In this case you might skip this part.

R View (Table) allows you to seamlessly include R scripts in KNIME. We will demonstrate how such a script is integrated using a minimal example.

Task

First, we need some example data in KNIME, which we will generate using the Data Generator node. You can keep the default settings and execute the node. The table contains four columns with random coordinates and one column containing a cluster number (Cluster_0 to Cluster_3). Now place an R View (Table) node into the workflow and connect the upper output port of the Data Generator node to the input of the R View (Table) node. Right-click and configure the node. If you get an error message like “Execute failed: R_HOME does not contain a folder with name 'bin'.” or “Execution failed: R Home is invalid.”, please change the R settings in the preferences. To do so, open and enter the path to your R installation (the folder that contains the bin directory, e.g., ).

If you get an error message like “Execute failed: Could not find Rserve package. Please install it in your R installation by running "install.packages('Rserve')".”, you may need to run your R binary as administrator (in Windows Explorer: right-click “Run as administrator”) and enter install.packages('Rserve') to install the package.

If R is correctly recognized, we can start writing an R script. Suppose we are interested in plotting the first and second coordinates and coloring them according to their cluster number. In R, this can be done in a single line. In the R View (Table) text editor, enter the following code:

plot(x=knime.in$Universe_0_0, y=knime.in$Universe_0_1, main="Plotting column Universe_0_0 vs. Universe_0_1", col=knime.in$"Cluster Membership")

Explanation: The table provided as input to the R View (Table) node is available as an R data.frame with the name knime.in. Columns (also listed on the left side of the R View window) can be accessed in the usual R way by first specifying the data.frame name and then the column name (e.g. knime.in$Universe_0_0). plot is the plotting function we use to generate the image. We tell it to use the data in column Universe_0_0 of the data.frame knime.in (denoted as knime.in$Universe_0_0) as the x-coordinate and the column knime.in$Universe_0_1 as the y-coordinate in the plot. main is the main title of the plot, and col is the column used to determine the color (in this case, the Cluster Membership column).

Now press the and buttons.

Note: We needed to put some extra quotes around Cluster Membership. If we omit them, R would interpret the column name only up to the first space (knime.in$Cluster), which is not present in the table and leads to an error. Quotes are needed whenever column names contain spaces, tabs or other special characters, such as $ itself.
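If you want to try the script outside KNIME first, you can build a stand-in for knime.in yourself. The mock below rests on two assumptions. The random values only imitate the column layout of the Data Generator node. The cluster column is assumed to reach R as a factor, so plot() uses its integer codes as colors. Inside KNIME, knime.in is provided for you and this setup is not needed.

```r
# Sketch: a mock of the knime.in data.frame for testing the script in plain R.
set.seed(42)
n <- 100
knime.in <- data.frame(
  Universe_0_0 = runif(n),
  Universe_0_1 = runif(n),
  # assumption: cluster labels arrive as a factor (Cluster_0 .. Cluster_3)
  "Cluster Membership" = factor(sample(paste0("Cluster_", 0:3), n, replace = TRUE)),
  check.names = FALSE  # keep the space in the column name
)

# draw into a temporary PDF instead of an interactive window
pdf(tempfile(fileext = ".pdf"))
plot(x = knime.in$Universe_0_0, y = knime.in$Universe_0_1,
     main = "Plotting column Universe_0_0 vs. Universe_0_1",
     col = knime.in$"Cluster Membership")
invisible(dev.off())
```

check.names = FALSE is what preserves the space in "Cluster Membership". Without it, data.frame() would rename the column to Cluster.Membership.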

In this chapter, we will build a workflow with OpenMS / KNIME to quantify a label-free experiment. Label-free quantification is a method aiming to compare the relative amounts of proteins or peptides in two or more samples. We will start from the minimal workflow of the last chapter and, step-by-step, build a label-free quantification workflow.

As a start, we will extend the minimal workflow so that it performs a peptide identification using the OMSSA [9] search engine. Since OpenMS version 1.10, OMSSA is included in the OpenMS installation, so you do not need to download and install it yourself.

Note: You might also want to save your new identification workflow under a different name. Have a look at Section 2.4.6 for information on how to create copies of workflows.

Note: Opening the output file of OMSSAAdapter (the idXML file) directly is also possible, but the direct visualization of an idXML file is less useful.

In the next step, we will tweak the parameters of OMSSA to better reflect the instrument’s accuracy. Also, we will extend our pipeline with a false discovery rate (FDR) filter to retain only those identifications that will yield an FDR of < 1 %.
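To make the "FDR < 1 %" criterion concrete, the following base R sketch illustrates the target-decoy idea. The scores and decoy flags are made-up data, and higher scores are assumed to be better. It estimates the FDR at each score threshold as decoys divided by targets above that threshold, which is one common estimator, and converts it into q-values. This is a simplified illustration and not the exact computation performed by the FalseDiscoveryRate node.

```r
# Simplified target-decoy FDR sketch (hypothetical PSMs, higher score = better)
psms <- data.frame(
  score = c(95, 90, 88, 80, 75, 70, 60, 55, 50, 40),
  decoy = c(FALSE, FALSE, FALSE, FALSE, TRUE, FALSE, FALSE, TRUE, FALSE, TRUE)
)

compute_qvalues <- function(score, decoy) {
  ord <- order(score, decreasing = TRUE)
  n_decoy  <- cumsum(decoy[ord])
  n_target <- cumsum(!decoy[ord])
  # FDR estimate when accepting everything down to this PSM
  fdr <- n_decoy / pmax(n_target, 1)
  # q-value: the lowest FDR threshold at which this PSM is still accepted
  q <- rev(cummin(rev(fdr)))
  q_out <- numeric(length(score))
  q_out[ord] <- q
  q_out
}

psms$q <- compute_qvalues(psms$score, psms$decoy)
# keep only target PSMs that pass the 1 % FDR threshold
accepted <- subset(psms, !decoy & q < 0.01)
print(accepted)
```

In this toy data, only the four best-scoring targets survive, because the first decoy (score 75) raises the estimated FDR above 1 % for everything below it.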

Note: Whenever you change the configuration of a node, the node as well as all its successors will be reset to the Configured state (all node results are discarded and need to be recalculated by executing the nodes again).

Note: To add a modification, click on the empty value field in the configuration dialog to open the list editor dialog. In the new dialog, click . Then select the newly added modification to open the drop-down list where you can select the correct modification.

Note: You can direct the files of an Input File node to more than just one destination port.

Note: The finished identification workflow is now sufficiently complex that we might want to encapsulate it in a Metanode. For this, select all nodes inside the ZipLoop (including the Input File node) and right-click to select and name it ID. Metanodes are useful when you construct even larger workflows and want to keep an overview.

Note: If you are ahead of the tutorial or later on, you can further improve your FDR identification workflow by a so-called consensus identification using several search engines. Otherwise, just continue with section 3.3.

It has become widely accepted that the parallel usage of different search engines can increase peptide identification rates in shotgun proteomics experiments. The ConsensusID algorithm is based on the calculation of posterior error probabilities (PEP) and a combination of the normalized scores by considering missing peptide sequences.

Note: By default, X!Tandem takes additional enzyme cutting rules into consideration (besides the specified tryptic digest). Thus, for the tutorial files, you have to set PeptideIndexer’s enzyme → specificity parameter to none to accept X!Tandem’s non-tryptic identifications as well.

In the end the ID processing part of the workflow can be collapsed into a Metanode to keep the structure clean (see Figure 13).

Now that we have successfully constructed a peptide identification pipeline, we can add quantification capabilities to our workflow.

Note: The chromatographic RT range of a feature is about 30-60 s and its m/z range around 2.5 m/z in this dataset. If you have trouble zooming in on a feature, select the full RT range and zoom only into the m/z dimension by holding down ( on macOS) and repeatedly dragging a narrow box from the very left to the very right.

So far, we successfully performed peptide identification as well as quantification on individual LC-MS runs. For differential label-free analyses, however, we need to identify and quantify corresponding signals in different experiments and link them together to compare their intensities. Thus, we will now run our pipeline on all three available input files and extend it a bit further, so that it is able to find and link features across several runs.
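The idea of linking features across runs can be illustrated with a deliberately naive sketch. A feature in run B is linked to the closest unused feature in run A if both the RT and m/z differences lie within given tolerances. The function `link_features`, the tolerances and the feature lists are hypothetical. The sketch also ignores charge states. FeatureLinkerUnlabeledQT is based on quality threshold (QT) clustering and is considerably more elaborate.

```r
# Hypothetical greedy linking of features between two runs (illustration only)
link_features <- function(a, b, rt_tol = 30, mz_tol = 0.01) {
  links <- data.frame(a = integer(0), b = integer(0))
  used_a <- logical(nrow(a))  # each feature of run A may be linked only once
  for (j in seq_len(nrow(b))) {
    ok <- !used_a &
          abs(a$rt - b$rt[j]) <= rt_tol &
          abs(a$mz - b$mz[j]) <= mz_tol
    if (any(ok)) {
      # among compatible candidates, prefer the one closest in RT
      cand <- which(ok)
      i <- cand[which.min(abs(a$rt[cand] - b$rt[j]))]
      used_a[i] <- TRUE
      links <- rbind(links, data.frame(a = i, b = j))
    }
  }
  links
}

run_a <- data.frame(rt = c(100, 250, 400), mz = c(500.250, 612.300, 733.400))
run_b <- data.frame(rt = c(110, 395, 800), mz = c(500.255, 733.405, 900.100))
links <- link_features(run_a, run_b)
print(links)
```

Features without a partner within the tolerances stay unlinked. Larger RT shifts between runs are the reason why an RT alignment is usually performed before linking.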

Note: MapAlignerPoseClustering consumes several featureXML files and its output should still be several featureXML files containing the same features, but with the transformed RT values. In its configuration dialog, make sure that OutputTypes is set to featureXML.
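What "transformed RT values" means for a linear alignment can be shown with a small base R sketch. It assumes that landmark pairs are already known, i.e. the RT of the same peptide in a reference run and in the run to be aligned. MapAlignerPoseClustering estimates its transformation from the feature maps itself. Here the pairs are simply given as made-up numbers that follow an exact line.

```r
# Sketch of a linear RT alignment from given landmark pairs (hypothetical data)
landmarks <- data.frame(
  rt_map = c(100, 300, 500, 700, 900),  # RT (s) in the run to be aligned
  rt_ref = c(112, 316, 520, 724, 928)   # RT (s) of the same peptides in the reference
)

# fit rt_ref = intercept + slope * rt_map
fit <- lm(rt_ref ~ rt_map, data = landmarks)

# apply the transformation to all features; m/z values stay unchanged
features <- data.frame(rt = c(150, 450, 850), mz = c(501.3, 622.8, 745.1))
features$rt_aligned <- predict(fit, newdata = data.frame(rt_map = features$rt))
print(features)
```

Only the RT dimension is modified. This matches the note above: the aligned featureXML files contain the same features, just with transformed retention times.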

Note: You can specify the desired column separation character in the parameter settings (by default, it is set to “ ” (a space)). The output file of TextExporter can also be opened with external tools, e.g., Microsoft Excel, for downstream statistical analyses.

For downstream analysis of the quantification results within the KNIME environment, you can use the ConsensusTextReader node in instead of the Output Folder node to convert the output into a KNIME table (indicated by a triangle as output port). After running the node, you can view the KNIME table by right-clicking on the ConsensusTextReader and selecting .

Every row in this table corresponds to a so-called consensus feature, i.e., a peptide signal quantified across several runs. The first couple of columns describe the consensus feature as a whole (average RT and m/z across the maps, charge, etc.). The remaining columns describe the exact positions and intensities of the quantified features separately for all input samples (e.g., intensity_0 is the intensity of the feature in the first input file). The last 11 columns contain information on peptide identification.
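As an example of what such a table enables, here is a sketch that computes a log2 fold change between the first two samples. Only the column names intensity_0 and intensity_1 come from the description above. The remaining columns and all values are made up. The sketch also assumes that a feature missing in a run shows an intensity of 0 and turns such cases into NA.

```r
# Sketch: log2 fold change between two samples of a consensus feature table
consensus <- data.frame(
  rt = c(1200.5, 1875.2, 2410.8),
  mz = c(523.28, 611.82, 745.39),
  charge = c(2, 2, 3),
  intensity_0 = c(1.2e6, 8.0e5, 0),    # first input file
  intensity_1 = c(2.4e6, 4.0e5, 3.1e5) # second input file
)

# assumption: intensity 0 means "not quantified in this run" -> report NA
both <- consensus$intensity_0 > 0 & consensus$intensity_1 > 0
consensus$log2fc <- ifelse(both,
                           log2(consensus$intensity_1 / consensus$intensity_0),
                           NA)
print(consensus)
```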

Advanced downstream data analysis of quantitative mass spectrometry-based proteomics data can be performed using MSstats [11]. This tool can be combined with an OpenMS preprocessing pipeline (e.g. in KNIME). The OpenMS experimental design is used to present the data in an MSstats-conformant way for the analysis. Here, we give an example of how to use these resources when working with quantitative label-free data. We describe how to use OpenMS and MSstats for the analysis of the ABRF iPRG2015 dataset [12].

Note: Reanalysing the full dataset from scratch would take too long. In this tutorial session, we will focus on just the conversion process and the downstream analysis.

The R package MSstats can be used for statistical relative quantification of proteins and peptides in mass spectrometry-based proteomics. Both label-free and labeled experiments are supported, in combination with data-dependent, targeted and data-independent acquisition. Inputs can be identified and quantified entities (peptides or proteins), and the output is a list of differentially abundant entities or summaries of their relative abundance. MSstats depends on accurate feature detection, identification and quantification, which can be performed, e.g., by an OpenMS workflow.

In general MSstats can be used for data processing & visualization, as well as statistical modeling & inference. Please see [11] and http://msstats.org for further information.

The iPRG (Proteome Informatics Research Group) dataset from the study in 2015, as described in [12], aims at evaluating the effect of statistical analysis software on the accuracy of results on a proteomics label-free quantification experiment. The data is based on four artificial samples with known composition (background: 200 ng S. cerevisiae). These were spiked with different quantities of individual digested proteins, whose identifiers were masked for the competition as yeast proteins in the provided database (see Table 1).

| Protein | Name | Origin | Molecular Weight | Sample 1 | Sample 2 | Sample 3 | Sample 4 |
|---|---|---|---|---|---|---|---|
| A | Ovalbumin | Egg White | 45 kDa | 65 | 55 | 15 | 2 |
| B | Myoglobin | Equine Heart | 17 kDa | 55 | 15 | 2 | 65 |
| C | Phosphorylase b | Rabbit Muscle | 97 kDa | 15 | 2 | 65 | 55 |
| D | Beta-Galactosidase | Escherichia coli | 116 kDa | 2 | 65 | 55 | 15 |
| E | Bovine Serum Albumin | Bovine Serum | 66 kDa | 11 | 0.6 | 10 | 500 |
| F | Carbonic Anhydrase | Bovine Erythrocytes | 29 kDa | 10 | 500 | 11 | 0.6 |
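Because the spike-in amounts are known, the fold changes a statistical analysis should ideally recover can be computed directly from the table. The sketch below copies the amounts verbatim from the table (units are not stated there) and computes the expected log2 fold change of every sample relative to sample 1.

```r
# Spike-in amounts from the table above (proteins A-F, samples 1-4)
spike <- matrix(
  c(65, 55, 15, 2,
    55, 15, 2, 65,
    15, 2, 65, 55,
    2, 65, 55, 15,
    11, 0.6, 10, 500,
    10, 500, 11, 0.6),
  nrow = 6, byrow = TRUE,
  dimnames = list(c("A", "B", "C", "D", "E", "F"), paste0("Sample", 1:4))
)

# expected log2 fold change relative to sample 1 (each row divided by its first entry)
expected_log2fc <- log2(spike / spike[, "Sample1"])
print(round(expected_log2fc, 2))
```

For instance, ovalbumin (A) drops from 65 to 2 between samples 1 and 4, i.e. log2(2/65) ≈ -5.0. Bovine serum albumin (E) rises from 11 to 500, i.e. about +5.5.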

The iPRG LFQ workflow (Fig. 18) consists of an identification and a quantification part. The identification is achieved by searching the computationally calculated MS2 spectra from a sequence database (Input File node, here with the given database from iPRG: ) against the MS2 spectra from the original data (Input Files node with all mzMLs following ) using the OMSSAAdapter.

Note: If you want to reproduce the results at home, you have to download the iPRG data in mzML format and perform peak picking on it, or convert and pick the raw data with msconvert.

Afterwards, the results are scored using the FalseDiscoveryRate node and filtered to obtain only unique peptides (IDFilter), since MSstats does not yet support shared peptides. The quantification is achieved by the FeatureFinderCentroided, which performs the feature detection on the samples (maps). In the end, the quantification results are combined with the filtered identification results (IDMapper). In addition, a linear retention time alignment is performed (MapAlignerPoseClustering), followed by the feature linking process (FeatureLinkerUnlabeledQT). The ConsensusMapNormalizer is used to normalize the intensities via robust regression over a set of maps, and the IDConflictResolver ensures that only one identification (best score) is associated with a feature. The output of this workflow is a consensusXML file, which can now be converted using the MSstatsConverter (see section 3.5.5).
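The "one identification per feature" rule of IDConflictResolver can be illustrated with a small base R sketch. The data are hypothetical, and lower scores are assumed to be better (as for a q-value or PEP). If your score is "higher is better", flip the sort order. The node works on consensusXML and may apply further rules, so treat this purely as an illustration of the idea.

```r
# Hypothetical sketch: keep only the best-scoring identification per feature
ids <- data.frame(
  feature  = c(1, 1, 2, 3, 3, 3),
  sequence = c("PEPTIDEK", "PEPTLDEK", "SAMPLER", "AAAK", "AAGK", "AALK"),
  score    = c(0.001, 0.020, 0.005, 0.030, 0.002, 0.010),  # assumption: lower = better
  stringsAsFactors = FALSE
)

# sort by feature, then by score (best first); keep the first row of each feature
ids_sorted <- ids[order(ids$feature, ids$score), ]
best <- ids_sorted[!duplicated(ids_sorted$feature), ]
print(best)
```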

As mentioned before, the downstream analysis can be performed using MSstats. In this case an experimental design has to be specified for the OpenMS workflow. The structure of the experimental design used in OpenMS in case of the iPRG dataset is specified in Table 2. An explanation of the variables can be found in Table 3.

| Fraction_Group | Fraction | Spectra_Filepath | Label | Sample |
|---|---|---|---|---|
| 1 | 1 | Sample1-A | 1 | 1 |
| 2 | 1 | Sample1-B | 1 | 2 |
| 3 | 1 | Sample1-C | 1 | 3 |
| 4 | 1 | Sample2-A | 1 | 4 |
| 5 | 1 | Sample2-B | 1 | 5 |
| 6 | 1 | Sample2-C | 1 | 6 |
| 7 | 1 | Sample3-A | 1 | 7 |
| 8 | 1 | Sample3-B | 1 | 8 |
| 9 | 1 | Sample3-C | 1 | 9 |
| 10 | 1 | Sample4-A | 1 | 10 |
| 11 | 1 | Sample4-B | 1 | 11 |
| 12 | 1 | Sample4-C | 1 | 12 |

| Sample | MSstats_Condition | MSstats_BioReplicate |
|---|---|---|
| 1 | 1 | 1 |
| 2 | 1 | 2 |
| 3 | 1 | 3 |
| 4 | 2 | 4 |
| 5 | 2 | 5 |
| 6 | 2 | 6 |
| 7 | 3 | 7 |
| 8 | 3 | 8 |
| 9 | 3 | 9 |
| 10 | 4 | 10 |
| 11 | 4 | 11 |
| 12 | 4 | 12 |

| Variable | Value |
|---|---|
| Fraction_Group | Index used to group fractions and source files. |
| Fraction | 1st, 2nd, ... fraction. Note: All runs must have the same number of fractions. |
| Spectra_Filepath | Path to mzML files |
| Label | label-free: always 1; TMT6Plex: 1...6; SILAC with light and heavy: 1..2 |
| Sample | Index of sample measured in the specified label X, in fraction Y of fraction group Z. |
| Conditions | Further specification of different conditions (e.g. MSstats_Condition; MSstats_BioReplicate) |

The conditions are highly dependent on the type of experiment and on the kind of analysis you want to perform. For the MSstats analysis, the information on which sample belongs to which condition and whether there are biological replicates is mandatory. This can be specified in further condition columns, as explained in Table 3. For a detailed description of the MSstats-specific terminology, see the MSstats documentation, e.g. the R vignette.
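Instead of typing the design by hand in Excel, it can also be generated with a few lines of R. The sketch below reproduces Table 2 and writes it as a tab-separated file. It assumes that the file section and the sample section are written one after the other, separated by an empty line. The file names are taken verbatim from Table 2 and would have to point to your actual mzML files. The helper `to_tsv_lines` is hypothetical.

```r
# Sketch: write the iPRG experimental design (Table 2) as a TSV file
runs <- paste0("Sample", rep(1:4, each = 3), "-", rep(c("A", "B", "C"), times = 4))

file_section <- data.frame(
  Fraction_Group   = 1:12,
  Fraction         = 1,
  Spectra_Filepath = runs,
  Label            = 1,   # label-free: always 1
  Sample           = 1:12,
  stringsAsFactors = FALSE
)

sample_section <- data.frame(
  Sample               = 1:12,
  MSstats_Condition    = rep(1:4, each = 3),  # three replicates per condition
  MSstats_BioReplicate = 1:12
)

# hypothetical helper: header line plus one tab-separated line per row
to_tsv_lines <- function(df) {
  c(paste(names(df), collapse = "\t"), do.call(paste, c(df, sep = "\t")))
}

design_file <- tempfile(fileext = ".tsv")
writeLines(c(to_tsv_lines(file_section), "", to_tsv_lines(sample_section)),
           design_file)
cat(readLines(design_file), sep = "\n")
```

Generating the design programmatically makes it easy to keep the Sample indices of both sections consistent, which is easy to get wrong by hand for larger studies.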

Conversion of the OpenMS-internal consensusXML format (which is an aggregation of quantified and possibly identified features across several MS maps) to a table (in MSstats-conformant CSV format) is very easy. First, create a new KNIME workflow. Then, run the MSstatsConverter node with a consensusXML and the manually created (e.g. in Excel) experimental design as inputs (loaded via Input File nodes). The first input can be found in This file was generated by using the workflow (seen in Fig. 18). The second input is specified in .

Adjust the parameters in the config dialog of the converter to match the given experimental design file and to use a simple summing for peptides that elute in multiple features (with the same charge state, i.e. m/z value).

| Parameter | Value |
|---|---|
| msstats_bioreplicate | MSstats_BioReplicate |
| msstats_condition | MSstats_Condition |
| labeled_reference_peptides | false |
| retention_time_summarization_method (advanced) | sum |
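The effect of setting retention_time_summarization_method to sum can be pictured as follows. If the same peptide with the same charge was quantified in several features of one run, their intensities are added up. The column names below follow the MSstats input convention (ProteinName, PeptideSequence, PrecursorCharge, Run, Intensity). The rows are made up, and the sketch only illustrates the idea, not the converter's code.

```r
# Sketch of "sum" summarization over features of the same peptide ion per run
quant <- data.frame(
  ProteinName     = c("P1", "P1", "P1", "P2"),
  PeptideSequence = c("LVNELTEFAK", "LVNELTEFAK", "LVNELTEFAK", "YLYEIAR"),
  PrecursorCharge = c(2, 2, 2, 2),
  Run             = c(1, 1, 2, 1),
  Intensity       = c(1000, 250, 900, 500),
  stringsAsFactors = FALSE
)

# one row per protein / peptide / charge / run, intensities summed
summed <- aggregate(Intensity ~ ProteinName + PeptideSequence + PrecursorCharge + Run,
                    data = quant, FUN = sum)
print(summed)
```

Here, the two run-1 features of LVNELTEFAK (1000 and 250) are merged into a single value of 1250.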

The downstream analysis of the peptide ions with MSstats is performed in several steps. These steps are reflected by several KNIME R nodes, which consume the output of MSstatsConverter. The outline of the workflow is shown in Figure 19.

We load the file resulting from MSstatsConverter either by saving it with an Output File node and reloading it with the File Reader or, for advanced users, by using a URI Port to Variable node and using the variable in the File Reader config dialog (V button, located to the right of the “Browse...” button) to read from the temporary file.

Preprocessing

The first node (Table to R) loads MSstats as well as the data from the previous KNIME node and performs a preprocessing step on the input data. The inline R script (which needs to be pasted into the config dialog of the node) allows further preparation of the data produced by MSstatsConverter before the actual analysis is performed. In this example, proteins that were identified with only one feature were retained. Alternatively, they could be removed.
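If you prefer to remove such proteins, the logic is simple: count the distinct features per protein and drop proteins with only one. The Python sketch below illustrates this on hypothetical records with `protein` and `feature` keys; in the workflow, the equivalent filtering would be written in R inside the Table to R node.

```python
from collections import Counter


def drop_single_feature_proteins(rows):
    """Remove all rows of proteins that are quantified by only one feature."""
    features_per_protein = Counter()
    seen = set()
    for r in rows:
        key = (r["protein"], r["feature"])
        if key not in seen:  # count each distinct feature once, not once per run
            seen.add(key)
            features_per_protein[r["protein"]] += 1
    return [r for r in rows if features_per_protein[r["protein"]] > 1]


rows = [
    {"protein": "P1", "feature": "f1", "run": 1},
    {"protein": "P1", "feature": "f1", "run": 2},  # same feature, other run
    {"protein": "P2", "feature": "f2", "run": 1},
    {"protein": "P2", "feature": "f3", "run": 1},
]
kept = drop_single_feature_proteins(rows)
print(sorted({r["protein"] for r in kept}))
```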

In the same node, most importantly, the following line:

transforms the data into a format that is understood by MSstats. Here, dataProcess is one of the most important functions that the R package provides. The function performs the following steps:

In this example, we just state that missing intensity values are represented by the ’NA’ string.

Group Comparison

The goal of the analysis is the determination of differentially expressed proteins among the different conditions C1-C4. We can specify the comparisons that we want to make in a comparison matrix. For this, let's consider the following example:

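$$
\begin{array}{l|rrrr}
 & C1 & C2 & C3 & C4 \\ \hline
C2-C1 & -1 & 1 & 0 & 0 \\
C3-C1 & -1 & 0 & 1 & 0 \\
C4-C1 & -1 & 0 & 0 & 1 \\
C3-C2 & 0 & -1 & 1 & 0 \\
C4-C2 & 0 & -1 & 0 & 1 \\
C4-C3 & 0 & 0 & -1 & 1
\end{array}
\tag{3.1}
$$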

This matrix has the following properties:

We can generate such a matrix in R using the following code snippet in (for example) a new R to R node that takes over the R workspace from the previous node with all its variables:

```r
# One row per comparison: -1 for the reference, +1 for the compared condition
comparison1 <- matrix(c(-1, 1, 0, 0), nrow = 1)
comparison2 <- matrix(c(-1, 0, 1, 0), nrow = 1)
comparison3 <- matrix(c(-1, 0, 0, 1), nrow = 1)
comparison4 <- matrix(c(0, -1, 1, 0), nrow = 1)
comparison5 <- matrix(c(0, -1, 0, 1), nrow = 1)
comparison6 <- matrix(c(0, 0, -1, 1), nrow = 1)
comparison <- rbind(comparison1, comparison2, comparison3,
                    comparison4, comparison5, comparison6)
row.names(comparison) <- c("C2-C1", "C3-C1", "C4-C1", "C3-C2", "C4-C2", "C4-C3")
```

Here, we assemble each row in turn, stack the rows with rbind at the end, and provide row names that label each row with the respective comparison.
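With more conditions, typing each row by hand becomes tedious. The following Python sketch (an illustration, not part of the KNIME workflow) generates the same six rows and row names as the R snippet above from a list of condition labels.

```python
from itertools import combinations


def pairwise_contrasts(conditions):
    """Build one contrast row per pair of conditions (later minus earlier).

    Each row has -1 for the reference condition, +1 for the compared
    condition and 0 elsewhere, so every row sums to zero.
    """
    names, rows = [], []
    for i, j in combinations(range(len(conditions)), 2):
        row = [0] * len(conditions)
        row[i], row[j] = -1, 1
        rows.append(row)
        names.append(f"{conditions[j]}-{conditions[i]}")
    return names, rows


names, matrix = pairwise_contrasts(["C1", "C2", "C3", "C4"])
for name, row in zip(names, matrix):
    print(name, row)
```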

In MSstats, the group comparison is then performed with the following line:

No more parameters need to be set for performing the comparison.

Result Processing

In the next R to R node, the results are processed. The following code snippet:

```r
library(dplyr)  # provides bind_rows(); harmless if already loaded

test.MSstats.cr <- test.MSstats$ComparisonResult

# Rename spike-ins to A, B, C, ...
pnames <- c("A", "B", "C", "D", "E", "F")
names(pnames) <- c(
  "sp|P44015|VAC2_YEAST",
  "sp|P55752|ISCB_YEAST",
  "sp|P44374|SFG2_YEAST",
  "sp|P44983|UTR6_YEAST",
  "sp|P44683|PGA4_YEAST",
  "sp|P55249|ZRT4_YEAST"
)

# Collect the comparison results of the spike-in proteins
test.MSstats.cr.spikedins <- bind_rows(
  test.MSstats.cr[grep("P44015", test.MSstats.cr$Protein), ],
  test.MSstats.cr[grep("P55752", test.MSstats.cr$Protein), ],
  test.MSstats.cr[grep("P44374", test.MSstats.cr$Protein), ],
  test.MSstats.cr[grep("P44683", test.MSstats.cr$Protein), ],
  test.MSstats.cr[grep("P44983", test.MSstats.cr$Protein), ],
  test.MSstats.cr[grep("P55249", test.MSstats.cr$Protein), ]
)

# Rename proteins: spike-ins get their letter ...
test.MSstats.cr.spikedins$Protein <- sapply(test.MSstats.cr.spikedins$Protein,
                                            function(x) { pnames[as.character(x)] })
# ... and all other proteins get an empty label
test.MSstats.cr$Protein <- sapply(test.MSstats.cr$Protein, function(x) {
  x <- as.character(x)
  if (x %in% names(pnames)) {
    return(pnames[as.character(x)])
  } else {
    return("")
  }
})
```

will rename the spiked-in proteins to A, B, C, D, E, and F and remove the names of all other proteins, which is beneficial for the subsequent visualization, as performed, for example, in Figure 20.

Export

The last four nodes, each connected to the last node and making use of its workspace, export the results to a textual representation and volcano plots for further inspection. Firstly, quality control can be performed with the following snippet:

The code for this snippet is embedded in the first output node of the workflow. The resulting boxplots show the log2 intensity distribution across the MS runs.

The second node is an R View (Workspace) node that returns a volcano plot displaying differentially expressed proteins between conditions C2 and C1. The plot is described in more detail in the following Result section. This is how you generate it:

The last two nodes export the MSstats results as a KNIME table for potential further analysis or for writing them to a file (e.g., CSV). Note that you could also write output inside the R script if you are familiar with R. Use the following for an R to Table node exporting all results:

And this for an R to Table node exporting only results for the spike-ins:

An excerpt of the main result of the group comparison can be seen in Figure 20.

The volcano plots show differentially expressed spiked-in proteins. In the left plot, which shows the fold change C2-C1, we can see that the proteins D and F (sp|P44983|UTR6_YEAST and sp|P55249|ZRT4_YEAST) are significantly over-expressed in C2, while the proteins B, C, and E (sp|P55752|ISCB_YEAST, sp|P44374|SFG2_YEAST, and sp|P44683|PGA4_YEAST) are under-expressed. In the right plot, which shows the fold change of C3 vs. C2, we can see the proteins E and C (sp|P44683|PGA4_YEAST and sp|P44374|SFG2_YEAST) over-expressed and the proteins A and F (sp|P44015|VAC2_YEAST and sp|P55249|ZRT4_YEAST) under-expressed. The plots also show further differentially expressed proteins, which do not belong to the spiked-in proteins.

The full analysis workflow can be found under.

In the last chapter, we successfully quantified peptides in a label-free experiment. As a next step, we will extend this label-free quantification workflow with protein inference and protein quantification capabilities. This workflow uses some of the more advanced concepts of KNIME, as well as a few more nodes containing R code. For these reasons, you will not have to build it yourself. Instead, we have already prepared and copied this workflow to the USB sticks.

Just import into

KNIME via the menu entry

and

double-click the imported workflow in order to open it.

Before you can execute the workflow, you again have to correct the locations of the files in the Input Files nodes (don’t forget the one for the FASTA database inside the “ID” meta node). Try to run the workflow by executing all nodes at once.

We have made the following changes compared to the original label-free quantification workflow from the last chapter:

In the following, we will explain the subworkflow contained in the Protein inference with FidoAdapter meta node.

For downstream analysis on the protein ID level in KNIME, it is again necessary to convert the idXML result generated by FidoAdapter into a KNIME table.

ROC curves (receiver operating characteristic curves) are graphical plots that visualize sensitivity (true-positive rate) against fall-out (false-positive rate). They are often used to judge the quality of a discrimination method, such as a peptide or protein identification engine. The ROC Curve node already provides the functionality of drawing ROC curves for binary classification problems. When configuring this node, select the opt_global_target_decoy column as the class (i.e., target outcome) column. We want to find out how well our inferred protein probability discriminates between targets and decoys; therefore, add best_search_engine_score[1] (the inference engine score is treated like a peptide search engine score) to the list of "Columns containing positive class probabilities". View the plot by right-clicking and selecting . A perfect classifier has an area under the curve (AUC) of 1.0, and its curve touches the upper left corner of the plot. However, in protein or peptide identification, the ground truth (i.e., which target identifications are true and which are false) is usually not known. Instead, so-called pseudo-ROC curves are regularly used to plot the number of target proteins against the false discovery rate (FDR) or its protein-centric counterpart, the q-value. The FDR is approximated with the target-decoy approach, which uses the decoy IDs as a model for the false target IDs.
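The AUC reported for such a target-decoy ROC curve can be read as the probability that a randomly chosen target scores higher than a randomly chosen decoy. The following Python sketch computes this rank-based AUC from hypothetical protein probabilities, treating targets as the positive class as in the node configuration above. It is an illustration only, not the ROC Curve node's implementation, and its pairwise loop is quadratic, so it is meant for small examples.

```python
def roc_auc(scores, is_target):
    """Rank-based AUC for targets (positives) vs. decoys (negatives).

    Counts the fraction of (target, decoy) pairs in which the target
    has the higher score; ties count as one half.
    """
    pos = [s for s, t in zip(scores, is_target) if t]
    neg = [s for s, t in zip(scores, is_target) if not t]
    if not pos or not neg:
        raise ValueError("need at least one target and one decoy")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical protein posterior probabilities and target/decoy labels
scores = [0.99, 0.95, 0.90, 0.40, 0.30, 0.10]
is_target = [True, True, False, True, False, False]
print(roc_auc(scores, is_target))
```

Here one decoy (0.90) outscores one target (0.40), so 8 of the 9 target-decoy pairs are ordered correctly.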

ROC curves illustrate the discriminative capability of the scores of IDs. In the case of protein identifications, Fido produces the posterior probability of each protein as the output score. However, a perfect score should not only be highly discriminative (distinguishing true from false IDs), it should also be "calibrated": all IDs with a reported posterior probability of 95% should have roughly a 5% probability of being false. This implies that the expected number of false positives in a set of IDs can be computed as the sum of their posterior error probabilities (PEP = 1 - posterior probability). Dividing this sum by the number of proteins in the set yields a posterior-probability-estimated FDR, which can be compared to the target-decoy FDR. We can plot calibration curves to help us visualize the quality of the score (when the score is interpreted as a probability, as Fido does) by comparing how similar the target-decoy estimated FDR and the posterior-probability estimated FDR are. Good results should show a close correspondence between these two measurements, although a non-correspondence does not necessarily indicate wrong results.

The calculation is done by a simple R script in an R Snippet node. First, the target-decoy protein FDR is computed as the proportion of decoy proteins among all significant protein IDs. Then, the posterior-probability-driven FDR is estimated as the average posterior error probability of all significant protein IDs. Since the FDR is a property of a group of protein IDs, we can also calculate a local property for each protein: the q-value of a protein ID is the minimum FDR of any group of protein IDs that contains this protein ID. We plot the protein ID results versus the two different kinds of FDR estimates in R View (Table) (see Fig. 22).
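The following Python sketch follows the three definitions given above: target-decoy FDR as the decoy fraction, PEP-based FDR as the mean posterior error probability, and the q-value as the minimum FDR over all score thresholds that still include an ID. It is not the tutorial's R script; the input values are hypothetical, and tied scores are not grouped, which a careful implementation would handle.

```python
def fdr_estimates(proteins):
    """Return ranked IDs with target-decoy FDR, PEP-based FDR and q-values.

    proteins: list of (posterior_probability, is_decoy) tuples.
    """
    # Sort by decreasing posterior probability; each prefix of the ranked
    # list corresponds to one score threshold (ties are not grouped here).
    ranked = sorted(proteins, key=lambda p: p[0], reverse=True)
    decoy_fdr, pep_fdr = [], []
    n_decoys, pep_sum = 0, 0.0
    for k, (prob, is_decoy) in enumerate(ranked, start=1):
        n_decoys += int(is_decoy)
        pep_sum += 1.0 - prob           # posterior error probability
        decoy_fdr.append(n_decoys / k)  # decoys among all IDs above threshold
        pep_fdr.append(pep_sum / k)     # mean PEP above threshold
    # q-value: minimum FDR of any threshold that still contains the ID,
    # i.e. a running minimum taken from the bottom of the list upwards.
    q_values = decoy_fdr[:]
    for i in range(len(q_values) - 2, -1, -1):
        q_values[i] = min(q_values[i], q_values[i + 1])
    return ranked, decoy_fdr, pep_fdr, q_values


# Hypothetical Fido-like output: (posterior probability, is_decoy)
proteins = [(0.80, False), (0.99, False), (0.50, True), (0.97, False), (0.90, True)]
ranked, decoy_fdr, pep_fdr, q_values = fdr_estimates(proteins)
for (prob, is_decoy), d, p, q in zip(ranked, decoy_fdr, pep_fdr, q_values):
    print(f"p={prob:.2f} decoy={is_decoy} TD-FDR={d:.3f} PEP-FDR={p:.3f} q={q:.3f}")
```

Plotting `decoy_fdr` against `pep_fdr` gives the kind of calibration comparison described above.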

In the last chapters, we identified and quantified peptides in a label-free experiment. In this section, we would like to introduce a possible workflow for the analysis of isobaric data.

Let’s have a look at the workflow (see Fig. 23).

The full analysis workflow can be found here: .

The workflow has four input nodes. The first one is for the experimental design, to allow for an MSstatsTMT-compatible export (MSstatsConverter). The second one is for the .mzML files with the centroided spectra from the isobaric labeling experiment, and the third one is for the .fasta database used for identification. The last one allows specifying an output path for the plots generated by the R View, which runs MSstatsTMT (I). The quantification (A) is performed using the IsobaricAnalyzer. The tool is able to extract and normalize quantitative information from TMT and iTRAQ data. The values can be extracted from centroided MS2 or MS3 spectra (if available). Isotope correction is performed based on the specified correction matrix (as provided by the manufacturer). The identification (C) is performed as known from the previous chapters, using a database search and a target-decoy database.
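Conceptually, isotope correction can be viewed as solving a linear system: each observed reporter intensity is a mixture of the true channel intensities, weighted by the impurity fractions from the correction matrix. The Python sketch below uses a hypothetical three-channel matrix with made-up impurity values and an exact linear solve; IsobaricAnalyzer's actual matrix layout and solver may differ (a real implementation would, for example, typically need to keep the corrected intensities non-negative).

```python
def solve_linear(a, b):
    """Solve a @ x = b for a small square system (Gaussian elimination)."""
    n = len(b)
    # Augmented copy so that the inputs are not modified
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting: use the row with the largest entry in this column
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


# Hypothetical mixing matrix: column j describes how the signal of channel j
# is distributed over the observed channels (each column sums to 1).
MIXING = [
    [0.95, 0.02, 0.00],
    [0.05, 0.94, 0.03],
    [0.00, 0.04, 0.97],
]
true = [100.0, 200.0, 300.0]
observed = [sum(MIXING[i][j] * true[j] for j in range(3)) for i in range(3)]
corrected = solve_linear(MIXING, observed)
print([round(v, 3) for v in observed])
print([round(v, 6) for v in corrected])
```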

To reduce the complexity of the data for the later inference, the q-value estimation and FDR filtering are performed at the PSM level for each file individually (B). Afterwards, the identification (PSM) and quantitative information are combined using the IDMapper. After all available files have been processed, the intermediate results are aggregated (FileMerger, D). All PSM results are used for score estimation and protein inference (Epifany) (E). For detailed information about protein inference, please see Chapter 4. Then, decoys are removed and the inference results are filtered via a protein group FDR. Peptide-level results can be exported via MzTabExporter (F), protein-level results can be obtained via the ProteinQuantifier (G), or the results can be exported (MSstatsConverter, H) and further processed with the following R pipeline to allow for downstream processing using MSstatsTMT.

Please import the workflow from into

KNIME via the menu entry

and

double-click the imported workflow in order to open it. Before you can execute the workflow, you have to correct the locations of the files in the Input Files nodes (don’t forget the one for the FASTA database inside the “ID” meta node). Try to run the workflow by executing all nodes at once.

The R package MSstatsTMT can be used for protein significance analysis in shotgun mass spectrometry-based proteomic experiments with tandem mass tag (TMT) labeling. MSstatsTMT provides functionality for two types of analysis and their visualization: protein summarization based on peptide quantification, and model-based group comparison to detect significant changes in abundance. It depends on accurate feature detection, identification and quantification, which can be performed, e.g., by an OpenMS workflow.

In general, MSstatsTMT can be used for data processing and visualization, as well as statistical modeling. Please see [13] and http://msstats.org/msstatstmt/ for further information.

There is also a very helpful online lecture and tutorial for MSstatsTMT from the May Institute Workshop 2020. Please see https://youtu.be/3CDnrQxGLbA

We are using the MSV000084264 ground truth dataset, which consists of TMT10plex controlled mixtures of UPS1 peptides at different concentrations, spiked into SILAC HeLa peptides and measured in a dilution series (https://www.omicsdi.org/dataset/massive/MSV000084264). Figure 24 shows the experimental design. In this experiment, 5 different TMT10plex mixtures (different labeling strategies) were analysed. These were measured in triplicates, represented by the 15 MS runs (3 runs each). The example data, database and experimental design to run the workflow can be found here: https://abibuilder.informatik.uni-tuebingen.de/archive/openms/Tutorials/Data/isobaric_MSV000084264/.

The experimental design in table format allows for an MSstatsTMT-compatible export. The design is represented by two tables. The first one (Table 4) represents the overall structure of the experiment in terms of samples, fractions, labels and fraction groups. The second one (Table 5) complements the first by specifying the conditions, biological replicates, mixtures and label names for each channel. For additional information about the experimental design, please see Table 3 in Chapter 3.5.4.

| Spectra_Filepath | Fraction | Label | Fraction_Group | Sample |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 1 | 1 | 1 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 2 | 1 | 2 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 3 | 1 | 3 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 4 | 1 | 4 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 5 | 1 | 5 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 6 | 1 | 6 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 7 | 1 | 7 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 8 | 1 | 8 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 9 | 1 | 9 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML | 1 | 10 | 1 | 10 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 1 | 2 | 11 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 2 | 2 | 12 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 3 | 2 | 13 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 4 | 2 | 14 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 5 | 2 | 15 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 6 | 2 | 16 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 7 | 2 | 17 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 8 | 2 | 18 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 9 | 2 | 19 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML | 1 | 10 | 2 | 20 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 1 | 3 | 21 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 2 | 3 | 22 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 3 | 3 | 23 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 4 | 3 | 24 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 5 | 3 | 25 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 6 | 3 | 26 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 7 | 3 | 27 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 8 | 3 | 28 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 9 | 3 | 29 |

| 161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML | 1 | 10 | 3 | 30 |

| Sample | MSstats_Condition | MSstats_BioReplicate | MSstats_Mixture | LabelName |
|---|---|---|---|---|
| 1 | Norm | Norm | 1 | 126 |
| 2 | 0.667 | 0.667 | 1 | 127N |
| 3 | 0.125 | 0.125 | 1 | 127C |
| 4 | 0.5 | 0.5 | 1 | 128N |
| 5 | 1 | 1 | 1 | 128C |
| 6 | 0.125 | 0.125 | 1 | 129N |
| 7 | 0.5 | 0.5 | 1 | 129C |
| 8 | 1 | 1 | 1 | 130N |
| 9 | 0.667 | 0.667 | 1 | 130C |
| 10 | Norm | Norm | 1 | 131 |
| 11 | Norm | Norm | 1 | 126 |
| 12 | 0.667 | 0.667 | 1 | 127N |
| 13 | 0.125 | 0.125 | 1 | 127C |
| 14 | 0.5 | 0.5 | 1 | 128N |
| 15 | 1 | 1 | 1 | 128C |
| 16 | 0.125 | 0.125 | 1 | 129N |
| 17 | 0.5 | 0.5 | 1 | 129C |
| 18 | 1 | 1 | 1 | 130N |
| 19 | 0.667 | 0.667 | 1 | 130C |
| 20 | Norm | Norm | 1 | 131 |
| 21 | Norm | Norm | 1 | 126 |
| 22 | 0.667 | 0.667 | 1 | 127N |
| 23 | 0.125 | 0.125 | 1 | 127C |
| 24 | 0.5 | 0.5 | 1 | 128N |
| 25 | 1 | 1 | 1 | 128C |
| 26 | 0.125 | 0.125 | 1 | 129N |
| 27 | 0.5 | 0.5 | 1 | 129C |
| 28 | 1 | 1 | 1 | 130N |
| 29 | 0.667 | 0.667 | 1 | 130C |
| 30 | Norm | Norm | 1 | 131 |
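The two tables are linked through the Sample column. As a sketch (plain Python, not the MSstatsConverter implementation), the following reproduces the Sample numbering of the first table from the fraction group and label, and attaches the condition and TMT channel name from the second table. Only the ten label-to-condition assignments are entered, because they repeat identically for all three runs in the tables above.

```python
FILES = [
    "161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_01.mzML",
    "161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_02.mzML",
    "161117_SILAC_HeLa_UPS1_TMT10_SPS_MS3_Mixture1_03.mzML",
]
# Condition and channel name per label 1-10, taken from the sample table
CONDITIONS = ["Norm", "0.667", "0.125", "0.5", "1", "0.125", "0.5", "1", "0.667", "Norm"]
CHANNELS = ["126", "127N", "127C", "128N", "128C", "129N", "129C", "130N", "130C", "131"]


def design_rows(files, n_labels=10):
    """Yield one combined design row per (file, label)."""
    for group, path in enumerate(files, start=1):   # Fraction_Group
        for label in range(1, n_labels + 1):
            sample = (group - 1) * n_labels + label  # running Sample number
            yield {
                "Spectra_Filepath": path,
                "Fraction": 1,
                "Label": label,
                "Fraction_Group": group,
                "Sample": sample,
                "MSstats_Condition": CONDITIONS[label - 1],
                "LabelName": CHANNELS[label - 1],
            }


rows = list(design_rows(FILES))
print(len(rows), rows[11]["Sample"], rows[11]["MSstats_Condition"], rows[11]["LabelName"])
```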

After running the workflow, the MSstatsConverter will convert the OpenMS output, together with the experimental design, into a file (.csv) that can be processed using MSstatsTMT.

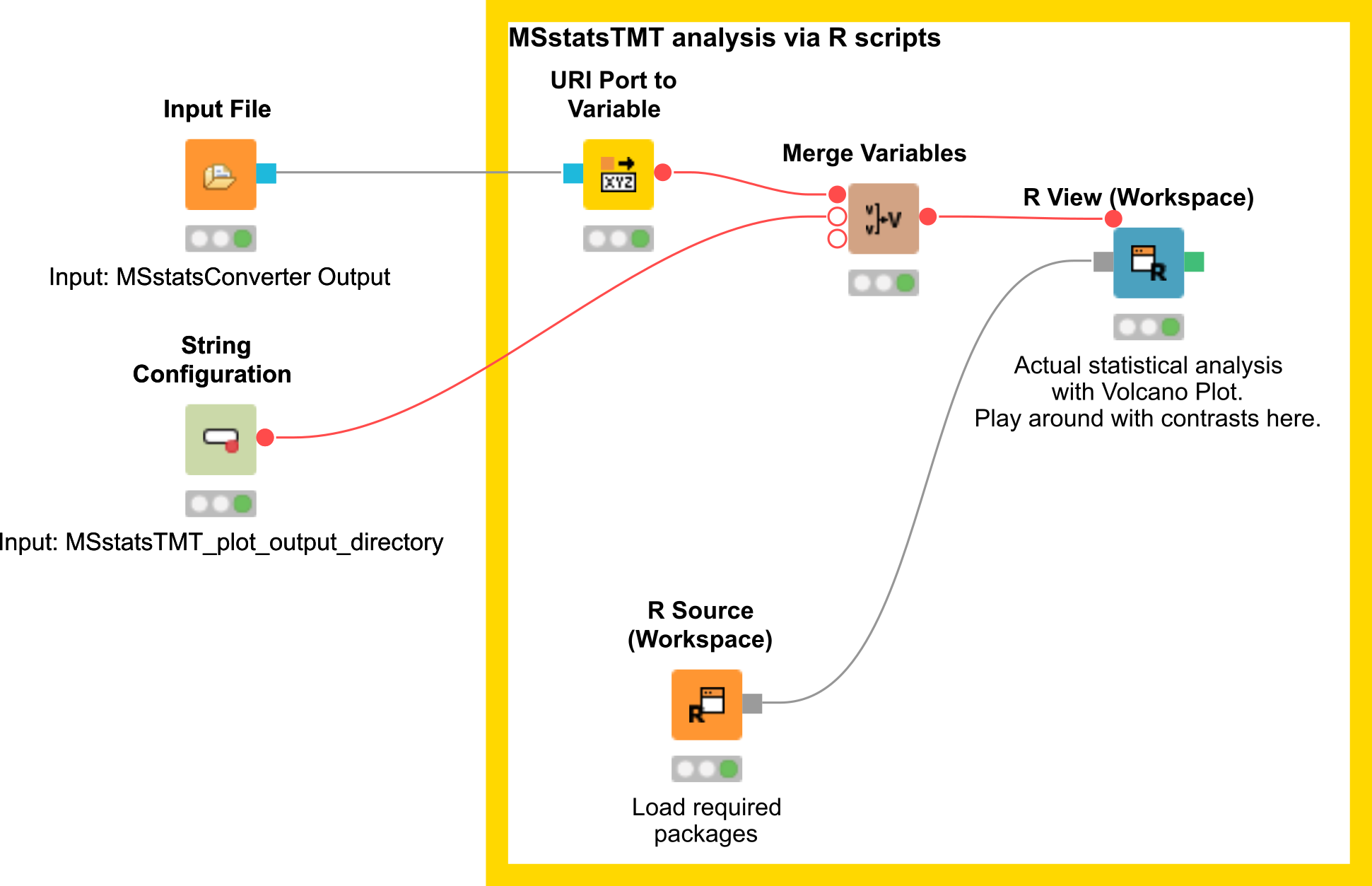

Here, we depict the analysis by MSstatsTMT using a segment of the isobaric analysis workflow (Fig. 25). The segment is available as .

There are two input nodes: the first one takes the result (.csv) from the MSstatsConverter and the second one a path to the directory where the plots generated by MSstatsTMT should be saved. The R source node loads the required packages, such as dplyr for data wrangling, MSstatsTMT for analysis and MSstats for plotting. The inputs are further processed in the R View node.

Here, the data of the Input File is loaded into R using the flow variable [”URI-0”]:

The OpenMStoMSstatsTMTFormat function preprocesses the OpenMS report and converts it into the required input format for MSstatsTMT, filtering based on unique peptides and measurements in each MS run.

Afterwards, different normalization steps are performed (global, protein, runs), as well as data imputation using the msstats method. In addition, peptide-level data are summarized to protein-level data.

There are many different ways to configure this method; please have a look at the MSstatsTMT package for additional detailed information: http://bioconductor.org/packages/release/bioc/html/MSstatsTMT.html

The next step is the comparison of the different conditions: here, either a pairwise comparison can be performed or a contrast matrix can be created. The goal is to detect and compare the UPS peptides spiked in at different concentrations.

```r
# Prepare the contrast matrix; check the condition names first
unique(quant.data$Condition)
comparison <- matrix(c(-1,  0,  0, 1,
                        0, -1,  0, 1,
                        0,  0, -1, 1,
                        0,  1, -1, 0,
                        1, -1,  0, 0),
                     nrow = 5, byrow = TRUE)
# Set the names of each row
row.names(comparison) <- contrasts <- c("1-0125", "1-05", "1-0667", "05-0667", "0125-05")
# Set the column names
colnames(comparison) <- c("0.125", "0.5", "0.667", "1")
```

The constructed contrast matrix is used in the groupComparisonTMT function to test for significant changes in protein abundance across conditions, based on a family of linear mixed-effects models for TMT experiments.

In the next step, the comparison can be plotted using the groupComparisonPlots function from MSstats.

Here, we show an example output of the R View, which depicts the significantly regulated UPS proteins in the comparison of 0.125 to 0.5 (contrast "0125-05", Fig. 26).

In addition, all plots are saved to the output directory specified at the beginning.

The isobaric analysis does not always have to be performed at the protein level. For example, in phosphoproteomics studies one is usually interested in the peptide level; in addition, inference on peptides with post-translational modifications is not straightforward. Here, we present an additional workflow at the peptide level, which can potentially be adapted and used for such cases.

Please see .

Quantification and identification of chemical compounds are basic tasks in metabolomic studies. In this tutorial session we construct a UPLC-MS based, label-free quantification and identification workflow. Following quantification and identification we then perform statistical downstream analysis to detect quantification values that differ significantly between two conditions. This approach can, for example, be used to detect biomarkers. Here, we use two spike-in conditions of a dilution series (0.5 mg/l and 10.0 mg/l, male blood background, measured in triplicates) comprising seven isotopically labeled compounds. The goal of this tutorial is to detect and quantify these differential spike-in compounds against the complex background.

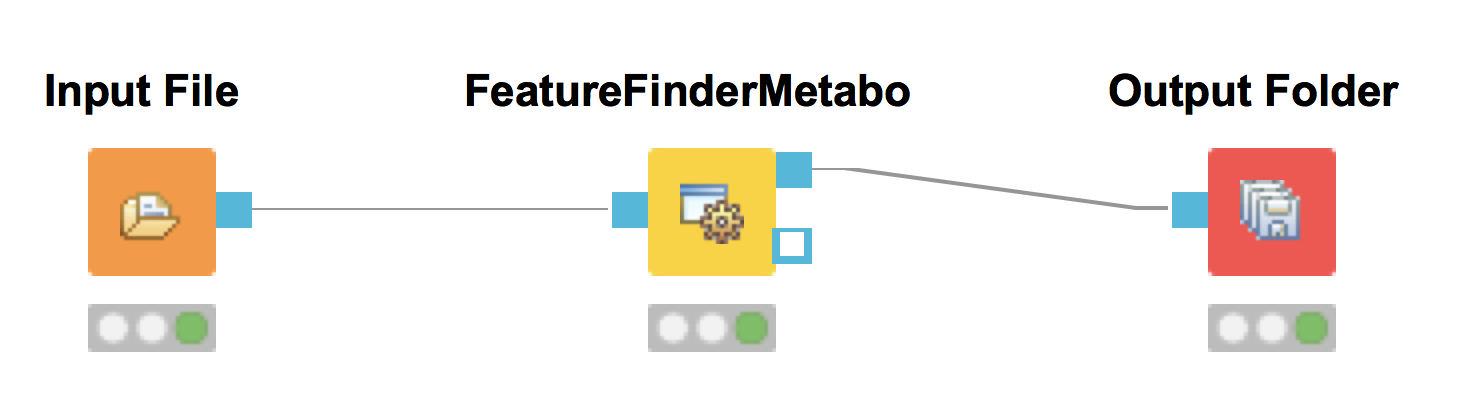

For the metabolite quantification we choose an approach similar to the one used for peptides, but this time based on the OpenMS FeatureFinderMetabo method. This feature finder again collects peak picked data into individual mass traces. The reason why we need a different feature finder for metabolites lies in the step after trace detection: the aggregation of isotopic traces belonging to the same compound ion into the same feature. Compared to peptides with their averagine model, small molecules have very different isotopic distributions. To group small molecule mass traces correctly, an aggregation model tailored to small molecules is thus needed.

In the following, advanced parameters are highlighted. These parameters can only be altered if the corresponding field for advanced parameters (bottom right corner of the tool's configuration dialog; see 2.4.2) is activated.

| parameter | value |
|---|---|
| algorithm →common →chrom_fwhm | 8.0 |
| algorithm →mtd →trace_termination_criterion | sample_rate |
| algorithm →mtd →min_trace_length | 3.0 |
| algorithm →mtd →max_trace_length | 600.0 |
| algorithm →epd →width_filtering | off |
| algorithm →ffm →report_convex_hulls | true |

The parameters change the behavior of FeatureFinderMetabo as follows:

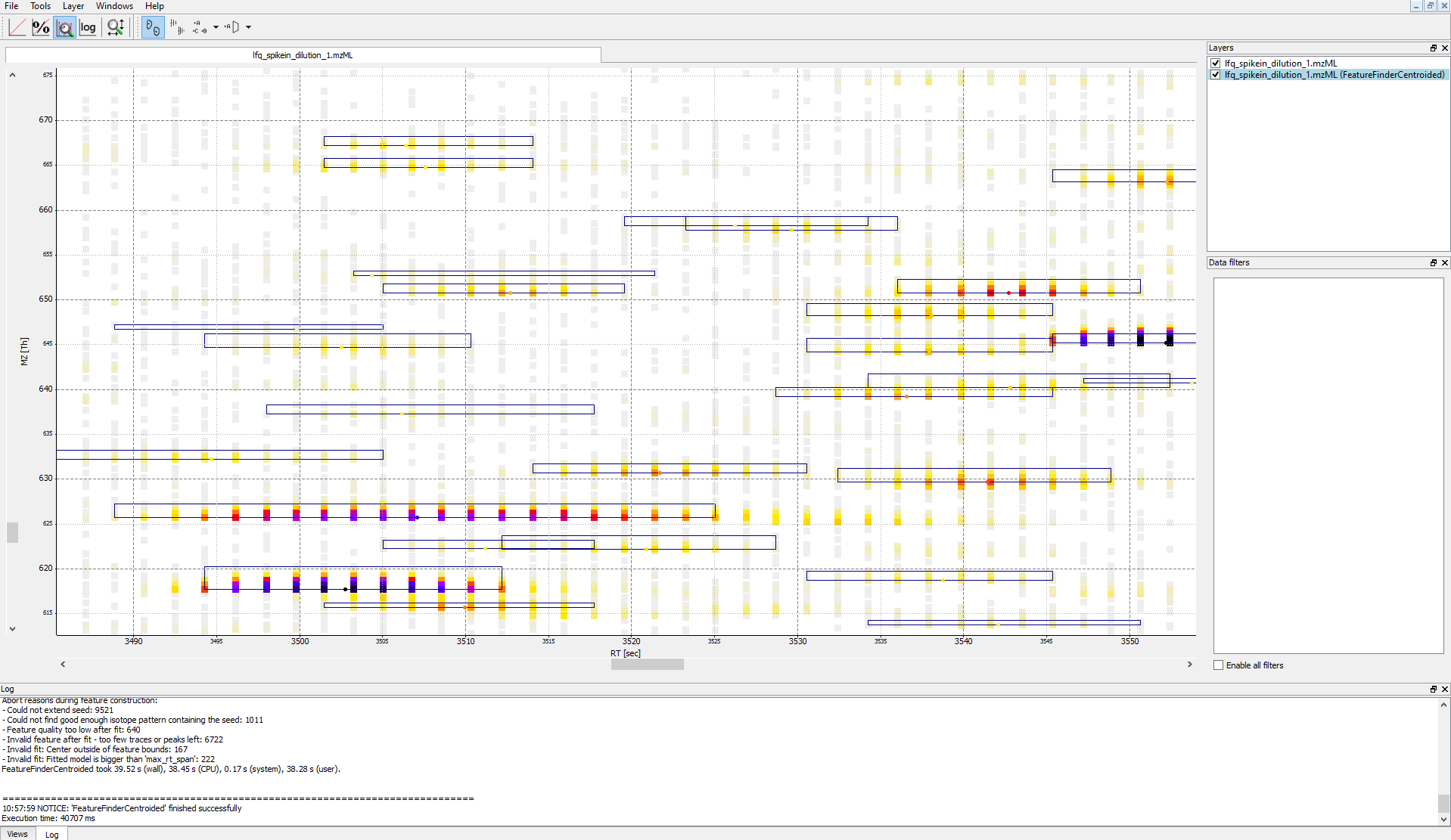

The output .featureXML file can be visualized with TOPPView on top of the used .mzML file (in a so-called layer) to look at the identified features.

First start TOPPView and open the example .mzML file (see Fig. 28). Afterwards open the .featureXML output as new layer (see Fig. 29). The overlay is depicted in Figure 30. The zoom of the .mzML - .featureXML overlay shows the individual mass traces and the assembly of those in a feature (see Fig. 31).

The workflow can be extended for multi-file analysis. In this case, an Input Files node has to be used instead of the Input File node. A ZipLoopStart node has to be placed in front of FeatureFinderMetabo and a ZipLoopEnd node behind it, since FeatureFinderMetabo analyzes the data file by file.

To facilitate the collection of features corresponding to the same compound ion across different samples, an alignment of the samples’ feature maps along retention time is often helpful. In addition to local, small-scale elution differences, one can often see constant retention time shifts across large sections between samples. We can use linear transformations to correct for these large-scale retention time differences. This brings the majority of corresponding compound ions close to each other. Finding the correct corresponding ions is then faster and easier, as we don’t have to search as far around individual features.
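Once corresponding features in two maps are known, a linear retention time transformation can be estimated, for example by least squares. The Python sketch below fits `rt_reference ~ slope * rt_sample + intercept` on hypothetical matched pairs. This ordinary least-squares fit is only an illustration; MapAlignerPoseClustering determines its transformation with the pose clustering algorithm mentioned below, which this sketch does not implement.

```python
def fit_linear_rt(rt_sample, rt_reference):
    """Least-squares fit of rt_reference ~ slope * rt_sample + intercept."""
    n = len(rt_sample)
    if n < 2 or n != len(rt_reference):
        raise ValueError("need at least two matched feature pairs")
    mean_x = sum(rt_sample) / n
    mean_y = sum(rt_reference) / n
    sxx = sum((x - mean_x) ** 2 for x in rt_sample)
    if sxx == 0:
        raise ValueError("sample retention times must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(rt_sample, rt_reference))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical matched features (RT in seconds): the sample elutes earlier,
# and the shift grows with retention time.
rt_sample = [100.0, 200.0, 300.0, 400.0]
rt_reference = [107.0, 209.0, 311.0, 413.0]
slope, intercept = fit_linear_rt(rt_sample, rt_reference)
print(f"slope={slope:.4f} intercept={intercept:.2f}")
# Map any sample RT onto the reference time scale
print(slope * 250.0 + intercept)
```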

| parameter | value |
|---|---|
| algorithm →max_num_peaks_considered | -1 |
| algorithm →superimposer →mz_pair_max_distance | 0.005 |
| algorithm →superimposer →num_used_points | 10000 |
| algorithm →pairfinder →distance_RT →max_difference | 20.0 |
| algorithm →pairfinder →distance_MZ →max_difference | 20.0 |
| algorithm →pairfinder →distance_MZ →unit | ppm |

MapAlignerPoseClustering provides an algorithm to align the retention time scales of multiple input files, correcting shifts and distortions between them. Retention time adjustment may be necessary to correct for chromatography differences, e.g., before data from multiple LC-MS runs can be combined (feature linking). The alignment algorithm implemented here is the pose clustering algorithm.

The parameters change the behavior of MapAlignerPoseClustering as follows:

The next step after retention time correction is the grouping of corresponding features in multiple samples. In contrast to the previous alignment, we assume no linear relations of features across samples. The method used is tolerant to local swaps in elution order.
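The grouping relies on two tolerances: a maximum retention time difference and a maximum m/z difference given in ppm, i.e., relative to the m/z value. The Python sketch below only applies these two hard cutoffs to pairs of hypothetical (rt, mz) features, with defaults taken from the FeatureLinkerUnlabeledQT table that follows; the tool itself additionally ranks candidate pairs by a combined distance and groups them with a QT clustering approach, which this sketch does not reproduce.

```python
def ppm_difference(mz_a, mz_b):
    """Absolute m/z difference of mz_b relative to mz_a, in ppm."""
    return abs(mz_b - mz_a) / mz_a * 1e6


def within_tolerances(feat_a, feat_b, max_rt_diff=40.0, max_mz_ppm=20.0):
    """Return True if two (rt, mz) features satisfy both hard cutoffs.

    Defaults mirror the FeatureLinkerUnlabeledQT values in the table
    (RT difference in seconds, m/z difference in ppm).
    """
    rt_a, mz_a = feat_a
    rt_b, mz_b = feat_b
    return abs(rt_a - rt_b) <= max_rt_diff and ppm_difference(mz_a, mz_b) <= max_mz_ppm


# At m/z 300, 20 ppm corresponds to 0.006 Th
print(ppm_difference(300.0, 300.0045))                       # about 15 ppm
print(within_tolerances((120.0, 300.0), (150.0, 300.0045)))  # True
print(within_tolerances((120.0, 300.0), (120.0, 300.009)))   # False: about 30 ppm
print(within_tolerances((120.0, 300.0), (170.0, 300.0)))     # False: 50 s apart
```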

| parameter | value |
|---|---|
| algorithm →distance_RT →max_difference | 40.0 |
| algorithm →distance_MZ →max_difference | 20.0 |
| algorithm →distance_MZ →unit | ppm |

The parameters change the behavior of FeatureLinkerUnlabeledQT as follows (similar to the parameters we adjusted for MapAlignerPoseClustering):

You should find a single, tab-separated file containing the information on where metabolites were found and with which intensities. You can also add Output Folder nodes at different stages of the workflow and inspect the intermediate results (e.g., identified metabolite features for each input map). The complete workflow can be seen in Figure 34. In the following section we will try to identify those metabolites.

The FeatureLinkerUnlabeledQT output can be visualized in TOPPView on top of the input and output of FeatureFinderMetabo (see Fig. 35).

At this point, we have found several metabolites in the individual maps but do not yet know what they are. To identify metabolites, OpenMS provides multiple tools, including search by mass: the AccurateMassSearch node searches observed masses against the Human Metabolome Database (HMDB) [14, 15, 16]. We start with the workflow from the previous section (see Figure 34).
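The core idea of a search by mass is to compare an observed m/z with the theoretical m/z of candidate compounds and to accept candidates within a ppm tolerance. The Python sketch below does this for a tiny, hypothetical two-compound candidate list and the [M+H]+ ion only; AccurateMassSearch itself searches HMDB, considers further adducts and has its own parameters, none of which are reproduced here.

```python
# Monoisotopic element masses (u) and the proton mass
ELEMENT_MASS = {"C": 12.0, "H": 1.00782503207, "N": 14.0030740048,
                "O": 15.99491461956, "S": 31.97207100}
PROTON = 1.007276466812

# Tiny hypothetical candidate list (formula as element counts)
CANDIDATES = {
    "glutathione": {"C": 10, "H": 17, "N": 3, "O": 6, "S": 1},
    "glucose": {"C": 6, "H": 12, "O": 6},
}


def monoisotopic_mass(formula):
    """Sum of monoisotopic element masses for a formula {element: count}."""
    return sum(ELEMENT_MASS[el] * n for el, n in formula.items())


def search_mh(observed_mz, candidates, tol_ppm=5.0):
    """Return (name, ppm error) for candidates whose [M+H]+ matches observed_mz."""
    hits = []
    for name, formula in candidates.items():
        theoretical = monoisotopic_mass(formula) + PROTON
        error_ppm = (observed_mz - theoretical) / theoretical * 1e6
        if abs(error_ppm) <= tol_ppm:
            hits.append((name, error_ppm))
    return hits


print(search_mh(308.0911, CANDIDATES))
```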

The result of the AccurateMassSearch node is in the mzTab format [17] so you can easily open it in a text editor or import it into Excel or KNIME, which we will do in the next section. The complete workflow from this section is shown in Figure 36.

The result from the TextExporter node as well as the result from the AccurateMassSearch node are files, while standard KNIME nodes display and process only KNIME tables. To convert these files into KNIME tables, we need two different nodes. For the AccurateMassSearch results, we use the MzTabReader node and its Small Molecule Section port. For the result of the TextExporter, we use the ConsensusTextReader node.

When executed, both nodes will import the OpenMS files and provide access to the data as KNIME tables. Following the current PSI standard, the MzTabReader exports the retention time values as a list. This list has to be parsed using the SplitCollectionColumn node, which outputs a "Split Value 1" column containing the first entry of the retention time list; this column has to be renamed to retention time using the ColumnRename node. You can now combine both tables using the Joiner node and configure it to match the m/z and retention time values of the respective tables. The full workflow is shown in Figure 37.

Metabolites commonly co-elute as ions with different adducts (e.g., glutathione+H, glutathione+Na) or with charge-neutral modifications (e.g., water loss). Grouping such related ions allows information to be leveraged across features. For example, a low-intensity, single-trace feature could still be assigned a charge and adduct due to a matching high-quality feature. Such information can then be used by several OpenMS tools, such as AccurateMassSearch, for example to narrow down candidates for identification.

For this grouping task, we provide the MetaboliteAdductDecharger node. Its method explores the combinatorial space of all adduct combinations in a charge range for optimal explanations. Using defined adduct probabilities, it assigns co-eluting features having suitable mass shifts and charges those adduct combinations which maximize overall ion probabilities.
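A strongly simplified view of this idea: two co-eluting, singly charged ions stem from the same neutral mass M if their m/z difference equals the mass difference of two adducts. The Python sketch below tests all ordered adduct pairs and keeps the most probable explanation. The adduct list is restricted to charge 1, and the probabilities are hypothetical placeholders, not MetaboliteAdductDecharger defaults; the tool itself explores a much larger combinatorial space (charge ranges, neutral losses, many features at once).

```python
from itertools import permutations

# Mass added to the neutral molecule M by each singly charged adduct (u),
# paired with a hypothetical prior probability.
ADDUCTS = {
    "H+": (1.007276, 0.6),
    "Na+": (22.989221, 0.2),
    "NH4+": (18.033826, 0.1),
    "K+": (38.963158, 0.1),
}


def explain_pair(mz1, mz2, tol=0.005):
    """Most probable adduct pair for two co-eluting z=1 ions, or None.

    Returns (adduct_of_ion1, adduct_of_ion2, neutral_mass, probability).
    """
    best = None
    for (a1, (m1, p1)), (a2, (m2, p2)) in permutations(ADDUCTS.items(), 2):
        # Same neutral mass M: mz1 - m1 == mz2 - m2 (within tolerance)
        if abs((mz2 - mz1) - (m2 - m1)) <= tol:
            prob = p1 * p2
            if best is None or prob > best[3]:
                best = (a1, a2, mz1 - m1, prob)
    return best


# [M+H]+ and [M+Na]+ of glutathione (M = 307.0838)
print(explain_pair(308.091083, 330.073027))
```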

The tool works natively with featureXML data, allowing the use of reported convex hulls. On such a single-sample level, co-elution settings can be chosen more stringently, as ionization-based adducts should not influence the elution time; instead, elution differences of related ions should be due to slightly differently estimated times for their feature centroids.

Alternatively, consensusXML data from feature linking can be converted for use, though with less chromatographic information. Here, the averaging of elution times for features linked across samples motivates wider co-elution tolerances.

The two main tool outputs are a consensusXML file with compound groups of related input ions, and a featureXML file containing the input features annotated with inferred adduct information and charges.

Options to respect or replace ion charges or adducts allow for example:

Task

A modified metabolomics workflow with exemplary MetaboliteAdductDecharger use and parameters is provided in. Run the workflow, inspect the tool outputs and compare the AccurateMassSearch results with and without adduct grouping.

Now that you have your data in KNIME you should try to get a feeling for the capabilities of KNIME.

Task

Check out the Molecule Type Cast node together with subsequent cheminformatics nodes (e.g., RDKit From Molecule) to render the structural formulas contained in the result table.

Task

Have a look at the Column Filter node to reduce the table to the interesting columns, e.g., only the IDs, chemical formula, and intensities.

Task

Try to compute and visualize the m/z and retention time error of the different feature elements (from the input maps) of each consensus feature. Hint: A nicely configured Math Formula (Multi Column) node should suffice.
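Purely to make the arithmetic of this task explicit, the Python sketch below computes, for each feature element, the retention time error in seconds and the m/z error in ppm relative to its consensus feature. The tuple layout is a hypothetical stand-in for the columns of the imported consensus table; in KNIME itself, the hint above points to the Math Formula (Multi Column) node.

```python
def element_errors(consensus, elements):
    """Deviation of each feature element from its consensus feature.

    consensus: (rt, mz) of the consensus feature.
    elements:  list of (map_index, rt, mz) of the linked feature elements.
    Returns a list of (map_index, rt_error_s, mz_error_ppm).
    """
    c_rt, c_mz = consensus
    return [(idx, rt - c_rt, (mz - c_mz) / c_mz * 1e6) for idx, rt, mz in elements]


# Hypothetical consensus feature with two linked elements
consensus = (100.0, 500.0)
elements = [(0, 98.0, 500.0025), (1, 102.0, 499.9975)]
errors = element_errors(consensus, elements)
for idx, rt_err, mz_err in errors:
    print(f"map {idx}: RT error {rt_err:+.1f} s, m/z error {mz_err:+.2f} ppm")
```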

Identifying metabolites using only the accurate mass may lead to ambiguous results. In practice, additional information (e.g., the retention time) is used to further narrow down potential candidates. Apart from MS1-based features, tandem mass spectra (MS2) of metabolites provide additional information. In this part of the tutorial, we take a look at how metabolite spectra can be identified using a library of previously identified spectra.

Because these libraries tend to be large we don’t distribute them with OpenMS.

Task

Construct the workflow as shown in Fig. 39. Use the file as input for your workflow. You can use the spectral library from as second input. The first input file contains tandem spectra that are identified by the MetaboliteSpectralMatcher. The resulting mzTab file is read back into a KNIME table. The retention time values are exported as a list based on the current PSI standard. This list has to be parsed using the SplitCollectionColumn node, which outputs a "Split Value 1" column based on the first entry in the retention time list; this column has to be renamed to retention time using the ColumnRename node before it is stored in an Excel table. Make sure that you connect the MzTabReader port corresponding to the Small Molecule Section to the Excel writer (XLS). Please select the "add column headers" option in the Excel writer (XLS).

Run the workflow and inspect the output.

In metabolomics, matches between tandem spectra and spectral libraries are manually validated. Several commercial and free online resources exist which help in that task. Some examples are:

Here, we will use METLIN to manually validate metabolites.

Task

Check in the .xlsx output from the Excel writer (XLS) whether you can find glutathione. Use the retention time column to find the spectrum in the mzML file. Here, open the file in the in TOPPView. The MS/MS spectrum with a retention time of 67.6 s is used as an example. The spectrum can be selected based on the retention time in the scan view window. To do so, double-click the MS1 spectrum with a retention time of 66.9 s, and the MS/MS spectra recorded in this time frame will show up. Select the tandem spectrum of glutathione, but do not close TOPPView yet.

Task

On the METLIN homepage, search for glutathione using the advanced search (https://metlin.scripps.edu/landing_page.php?pgcontent=advanced_search). Note that free registration is required. Which collision energy (and polarity) gives the best (visual) match to your experimental spectrum in TOPPView? Compare the fragmentation patterns of both spectra in terms of the (relative) intensities, the m/z values of the peaks and the distances between peaks. Each distance between two peaks corresponds to a neutral loss of a specific elemental composition (e.g., the loss of NH2 from a singly charged ion results in a peak distance of 16.019 Th, based on monoisotopic masses).
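The peak-distance reasoning can be checked numerically. This minimal sketch computes monoisotopic masses from element masses. It then compares a peak distance, multiplied by the charge, with a small candidate table of losses. The candidate list and the 0.005 Da tolerance are assumptions for illustration.

```python
# Monoisotopic element masses in Da
MONOISOTOPIC = {"H": 1.00782503207, "C": 12.0, "N": 14.0030740048, "O": 15.99491461956}

# Hypothetical selection of candidate losses to test against
CANDIDATE_LOSSES = {
    "NH2": {"N": 1, "H": 2},
    "NH3": {"N": 1, "H": 3},
    "H2O": {"H": 2, "O": 1},
    "CO2": {"C": 1, "O": 2},
}


def formula_mass(composition):
    """Monoisotopic mass of an elemental composition such as {"N": 1, "H": 2}."""
    return sum(MONOISOTOPIC[element] * count for element, count in composition.items())


def explain_distance(mz_a, mz_b, charge=1, tolerance=0.005):
    """Names of candidate losses matching the distance between two peaks of the same charge."""
    delta = abs(mz_b - mz_a) * charge  # m/z distance times charge gives the mass difference
    return [name for name, comp in CANDIDATE_LOSSES.items()
            if abs(formula_mass(comp) - delta) <= tolerance]


print(round(formula_mass(CANDIDATE_LOSSES["NH2"]), 4))
print(explain_distance(300.0, 316.0187))
```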

Another method for MS2 spectra-based metabolite identification is de novo identification. This approach can be used in addition to the other methods (accurate mass search, spectral library search) or on its own if no spectral library is available. In this part of the tutorial, we discuss how metabolite spectra can be identified using de novo tools. To this end, the tools SIRIUS and CSI:FingerID ([18, 19, 20]) were integrated into the OpenMS framework as the SiriusAdapter. SIRIUS uses isotope pattern analysis to determine the molecular formula and further analyses the fragmentation pattern of a compound using fragmentation trees. CSI:FingerID is a method for searching a fingerprint of a small molecule (metabolite) in a molecular structure database.

The node is able to work in different modes depending on the provided input.

The last approach is the preferred one, as SIRIUS gains a lot of additional information by using the OpenMS tools for preprocessing.

Task

Construct the workflow as shown in Fig. 42. Use the file as input for your workflow.

Run the workflow and inspect the output.

The output consists of two mzTab files and an internal .ms file: one mzTab file contains the SIRIUS results and the other the CSI:FingerID results. These provide information about the chemical formula, the adduct and the possible compound structure, referenced to the spectrum used in the analysis. Additional information can be extracted from the SiriusAdapter by setting an “out_workspace_directory”. The SIRIUS workspace will then be written to this directory after the calculation has finished. This workspace contains information about annotated fragments for each successfully explained compound.

In this part of the metabolomics session we take a look at more advanced downstream analysis and the use of the statistical programming language R. As laid out in the introduction we try to detect a set of spike-in compounds against a complex blood background. As there are many ways to perform this type of analysis we provide a complete workflow.

Task

Import the workflow from in KNIME:

The section below will guide you through the different parts of the workflow. Once you have understood the workflow, you should play around and be creative. Maybe create a novel visualization in KNIME or R, or do some more elaborate statistical analysis? Note that some basic R knowledge is required to fully understand the processing in R Snippet nodes.

This part is analogous to what you did for the simple metabolomics pipeline.

The first part is identical to what you did for the simple metabolomics pipeline. Additionally, we convert zero intensities into NA values and remove all rows that contain at least one NA value from the analysis. We do this using a very simple R Snippet and a subsequent Missing Value filter node.
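The logic of this R Snippet plus filter step is shown below in Python for illustration. It is not the snippet's actual R code. The sketch treats zero (or absent) intensities as NA and keeps only complete rows. The column names are hypothetical.

```python
def drop_incomplete_rows(rows, intensity_columns):
    """Treat zero or missing intensities as NA and keep only rows without any NA value."""
    complete = []
    for row in rows:
        values = [row.get(column) for column in intensity_columns]
        if any(value is None or value == 0 for value in values):
            continue  # at least one NA in this row: drop it
        complete.append(row)
    return complete


table = [
    {"consensus": 1, "sample_a": 1.2e5, "sample_b": 0.0},
    {"consensus": 2, "sample_a": 3.4e5, "sample_b": 2.9e5},
]
print(drop_incomplete_rows(table, ["sample_a", "sample_b"]))
```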

Task

Inspect the R Snippet by double-clicking on it. The KNIME table that is passed to an R Snippet node is available in R as a data.frame named knime.in. The result of this node will be read from the data.frame knime.out after the script finishes. Try to understand and evaluate parts of the script (Eval Selection). In this dialog, you can also print intermediate results to the Console pane, for example using the R commands head(knime.in) or print(knime.in).

After we linked features across all maps, we want to identify features that are significantly deregulated between the two conditions. We will first scale and normalize the data, then perform a t-test, and finally correct the obtained p-values for multiple testing using Benjamini-Hochberg. All of these steps will be carried out in individual R Snippet nodes.
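The multiple-testing correction step can be sketched in a few lines. The code below implements the standard Benjamini-Hochberg adjustment, the same definition used by R's p.adjust with method = "BH". It is written in Python for illustration and is not the code of the workflow's R Snippet. The example p-values are made up.

```python
def benjamini_hochberg(pvalues):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices sorted by ascending p-value
    adjusted = [0.0] * m
    running_min = 1.0  # also caps adjusted values at 1
    # Walk from the largest p-value down, enforcing monotonicity with a running minimum
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```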

KNIME supports multiple nodes for interactive visualization with interrelated output. The nodes used in this part of the workflow exemplify this concept. They further demonstrate how figures with data-dependent customization can easily be realized using basic KNIME nodes. Several simple operations are concatenated in order to enable an interactive volcano plot.
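A volcano plot places each feature by its log2 fold change (x axis) and its -log10 adjusted p-value (y axis). This minimal sketch computes both coordinates from mean intensities of the two conditions. It also flags features as significant using hypothetical cutoffs (|log2 FC| ≥ 1, q ≤ 0.05). The workflow itself works on normalized, logarithmized values, so its exact computation may differ.

```python
import math


def volcano_points(features, fc_cutoff=1.0, q_cutoff=0.05):
    """features: (name, mean intensity condition A, mean intensity condition B, adjusted p-value)."""
    points = []
    for name, mean_a, mean_b, q in features:
        log2_fc = math.log2(mean_b / mean_a)   # x coordinate
        neg_log10_q = -math.log10(q)           # y coordinate
        significant = abs(log2_fc) >= fc_cutoff and q <= q_cutoff
        points.append((name, log2_fc, neg_log10_q, significant))
    return points


points = volcano_points([("f1", 1.0e5, 4.0e5, 0.01), ("f2", 1.0e5, 1.1e5, 0.5)])
for point in points:
    print(point)
```

The significance flag is what a Color Manager node could use to highlight points in the interactive plot.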

Task

Inspect the nodes of this section. Customize your visualization and possibly try to visualize other aspects of your data.

R Dependencies: This section requires that the R packages ggplot2 and ggfortify are both installed. ggplot2 is part of the KNIME R Statistics Integration (Windows Binaries), which should already be installed via the full KNIME installer; ggfortify, however, is not. If you use an R installation where one or both of them are not yet installed, add an R Snippet node and double-click it to configure it. In the R Script text editor, enter the following code:

You can remove the install.packages commands once the packages have been installed successfully.

Even though the basic capabilities for (interactive) plots in KNIME are valuable for initial data exploration, professional looking depiction of analysis results often relies on dedicated plotting libraries. The statistics language R supports the addition of a large variety of packages, including packages providing extensive plotting capabilities. This part of the workflow shows how to use R nodes in KNIME to visualize more advanced figures. Specifically, we make use of different plotting packages to realize heatmaps.

Following the identification, quantification and statistical analysis, our data is merged and formatted for reporting. First, we want to discard our normalized and logarithmized intensity values in favor of the original ones. To this end, we first remove the intensity columns (Column Filter) and add the original intensities back (Joiner). For that, we use an Inner Join with the Joiner node. In the dialog of the node, we add two entries for the Joining Columns. For the first entry, we pick “retention_time” from the top input (i.e. the AccurateMassSearch output) and “rt_cf” (the retention time of the consensus features) from the bottom input (the result of the quantification). For the second entry, choose “exp_mass_to_charge” and “mz_cf”, respectively, to make the join unique. Note that the workflow needs to be executed up to the preceding nodes for the column selections to become available.
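An inner join keeps only rows whose key columns match in both tables. The sketch below reproduces the two-column join described above in plain Python. It assumes the key values in both tables are exactly equal because they originate from the same consensus features. The row contents are made up.

```python
def inner_join(top, bottom, keys):
    """Join two lists of dicts; keys is a list of (top_column, bottom_column) pairs that must all match."""
    index = {}
    for row in bottom:
        key = tuple(row[b] for _, b in keys)
        index.setdefault(key, []).append(row)
    joined = []
    for row in top:
        key = tuple(row[t] for t, _ in keys)
        for match in index.get(key, []):  # rows without a partner are dropped
            joined.append({**row, **match})
    return joined


top = [
    {"retention_time": 66.9, "exp_mass_to_charge": 300.1, "description": "compound A"},
    {"retention_time": 120.0, "exp_mass_to_charge": 200.0, "description": "compound B"},
]
bottom = [{"rt_cf": 66.9, "mz_cf": 300.1, "intensity_0": 1.0e5}]
result = inner_join(top, bottom, [("retention_time", "rt_cf"), ("exp_mass_to_charge", "mz_cf")])
print(result)
```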

Question

What happens if we use a Left Outer Join, Right Outer Join or Full Outer Join instead of the Inner Join?

Task

Inspect the output of the join operation after the Molecule Type Cast and RDKit molecular structure generation.

While all relevant information is now contained in our table the presentation could be improved. Currently, we have several rows corresponding to a single consensus feature (=linked feature) but with different, alternative identifications. It would be more convenient to have only one row for each consensus feature with all accurate mass identifications added as additional columns. To this end, we use the Column to Grid node that flattens several rows with the same consensus number into a single one. Note that we have to specify the maximum number of columns in the grid so we set this to a large value (e.g. 100). We finally export the data to an Excel file (XLS Writer).
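The reshaping described above is shown below in plain Python. This is an illustration of the idea, not how the Column to Grid node is implemented. Rows sharing a consensus number are collapsed into one row, with the identifications spread over numbered columns up to a maximum. The column names are hypothetical.

```python
def rows_to_grid(rows, group_column, value_column, max_columns=100):
    """Collapse rows with the same group value into one row with numbered value columns."""
    grouped = {}
    for row in rows:
        grouped.setdefault(row[group_column], []).append(row[value_column])
    grid = []
    for key, values in grouped.items():  # dicts keep insertion order
        flat = {group_column: key}
        for i, value in enumerate(values[:max_columns]):
            flat[f"{value_column}_{i}"] = value
        grid.append(flat)
    return grid


rows = [
    {"consensus": 1, "identification": "A"},
    {"consensus": 1, "identification": "B"},
    {"consensus": 2, "identification": "C"},
]
grid = rows_to_grid(rows, "consensus", "identification")
print(grid)
```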

OpenSWATH [21] allows the analysis of LC-MS/MS DIA (data independent acquisition) data using the approach described by Gillet et al. [22]. The DIA approach described there uses 32 consecutive steps to iterate through precursor ion windows from 400-426 Da to 1175-1201 Da. At each step, it acquires a complete, multiplexed fragment ion spectrum of all precursors present in that window. After 32 fragmentations (or 3.2 seconds), the cycle is restarted and the first window (400-426 Da) is fragmented again, thus delivering complete “snapshots” of all fragments of a specific window every 3.2 seconds.
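The window scheme can be reconstructed from the numbers above. Thirty-two windows of 26 Da, each shifted by 25 Da (so neighbouring windows overlap by 1 Da), span 400-426 Da up to 1175-1201 Da. A cycle of 3.2 s leaves 0.1 s per window. This sketch derives the widths and step from those limits. Real acquisition methods may use other schemes.

```python
CYCLE_TIME_S = 3.2
N_WINDOWS = 32
TIME_PER_WINDOW_S = CYCLE_TIME_S / N_WINDOWS  # 0.1 s per precursor window


def swath_windows(start=400.0, width=26.0, step=25.0, count=N_WINDOWS):
    """Precursor isolation windows as (lower, upper) bounds; consecutive windows overlap by width - step."""
    return [(start + k * step, start + k * step + width) for k in range(count)]


def windows_for(mz, windows):
    """Indices of all windows whose range contains the given precursor m/z (inclusive bounds)."""
    return [k for k, (lower, upper) in enumerate(windows) if lower <= mz <= upper]


windows = swath_windows()
print(windows[0], windows[-1], TIME_PER_WINDOW_S)
print(windows_for(500.0, windows), windows_for(510.0, windows))
```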

The analysis approach described by Gillet et al. extracts ion traces of specific fragment ions from all MS2 spectra that have the same precursor isolation window, thus generating data that is very similar to SRM traces.
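Extracting an ion trace means collecting, over time, the intensity near one fragment m/z from all MS2 spectra of the same precursor window. This minimal sketch represents spectra as (retention time, window index, peaks) tuples and sums intensities within a ppm tolerance. The data layout, the 20 ppm tolerance and the peak values are assumptions, not OpenSWATH's internal representation.

```python
def extract_ion_trace(spectra, window_index, fragment_mz, tol_ppm=20.0):
    """Return (rt, summed intensity) pairs for one fragment m/z from spectra of one precursor window."""
    tolerance = fragment_mz * tol_ppm * 1e-6  # convert the ppm tolerance to an absolute m/z tolerance
    trace = []
    for rt, index, peaks in spectra:
        if index != window_index:
            continue  # spectrum belongs to a different precursor window
        intensity = sum(i for mz, i in peaks if abs(mz - fragment_mz) <= tolerance)
        trace.append((rt, intensity))
    return trace


spectra = [
    (10.0, 4, [(600.30, 100.0), (700.0, 5.0)]),
    (10.1, 5, [(600.30, 999.0)]),
    (13.2, 4, [(600.301, 250.0)]),  # same window again, one cycle (3.2 s) later
]
trace = extract_ion_trace(spectra, 4, 600.30)
print(trace)
```

Repeating this for every transition of an assay yields a set of co-eluting traces, analogous to SRM data.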

OpenSWATH has been fully integrated into OpenMS since version 1.10 [4, 2, 23, 24, 25].

mProphet (http://www.mprophet.org/) [26] is available as a standalone script in . R (http://www.r-project.org/) and the package MASS (http://cran.r-project.org/web/packages/MASS/) are additionally required to execute mProphet. Please obtain a version for Windows, Mac or Linux directly from CRAN.

PyProphet, a much faster reimplementation of the mProphet algorithm, is available from PyPI (https://pypi.python.org/pypi/pyprophet/). Using PyProphet instead of mProphet is recommended for large-scale applications.

mProphet will be used in this tutorial.

OpenSWATH requires an assay library to be supplied in the TraML format [27]. To enable manual editing of transition lists, the TOPP tool TargetedFileConverter is available, which accepts tab-separated files as input. Example datasets are provided in .

Please note that the transition list files need to have the extension .tsv.

The header of the transition list contains the following variables (with example values in brackets):

For further instructions about generic transition list and assay library generation, please see http://openswath.org/en/latest/docs/generic.html.

To convert transition lists to TraML, use the TargetedFileConverter. Please use the absolute path to your OpenMS installation.

In addition to the target assays, OpenSWATH requires decoy assays in the library, which are later used for classification and error rate estimation. For the decoy generation, it is crucial that the decoys represent the targets in a realistic but unnatural manner without interfering with the targets. The methods for decoy generation implemented in OpenSWATH include ’shuffle’, ’pseudo-reverse’, ’reverse’ and ’shift’. To append decoys to a TraML file, use the TOPP tool OpenSwathDecoyGenerator. Please use the absolute path to your OpenMS installation.
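Two of the sequence-level decoy methods can be sketched on plain peptide strings. This simplified sketch assumes ’reverse’ reverses the complete sequence. It also assumes ’pseudo-reverse’ reverses all residues except the C-terminal one, which keeps e.g. the tryptic K/R in place. OpenSwathDecoyGenerator additionally handles modifications and recomputes the fragment ion m/z values, which is omitted here.

```python
def reverse_decoy(sequence: str) -> str:
    """Reverse the complete peptide sequence."""
    return sequence[::-1]


def pseudo_reverse_decoy(sequence: str) -> str:
    """Reverse all residues but keep the C-terminal residue (e.g. K or R) in place."""
    if len(sequence) < 2:
        return sequence
    return sequence[:-1][::-1] + sequence[-1]


target = "PEPTIDEK"
print(reverse_decoy(target), pseudo_reverse_decoy(target))
```

Keeping the C-terminal residue preserves the cleavage-site chemistry of the target. Note that for some sequences the decoy can be identical to the target, which a real decoy generator needs to detect.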

An example KNIME workflow for OpenSWATH is supplied in (Fig. 44). The example dataset can be used for this workflow (filenames in brackets):

The resulting output can be found at your selected path and will be used as input for mProphet. Execute the script in the Terminal (Linux or Mac) or cmd.exe (Windows) in .

Please use the absolute path to your R installation and the result file:

or, on Windows:

The main output will be called

with statistical information available in.

Please note that due to the semi-supervised machine learning approach of mProphet, the results differ slightly when mProphet is executed several times.
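mProphet uses the decoys to estimate error rates. The basic target-decoy idea is sketched below. All scores are ranked, the FDR at each threshold is estimated as decoys/targets, and the FDRs are converted into q-values. This is a deliberate simplification. It uses no semi-supervised classifier and none of the additional statistics, such as pi0 estimation, that mProphet and PyProphet apply. Ties are not treated specially. The scores are made up.

```python
def target_decoy_qvalues(target_scores, decoy_scores):
    """Return (score, q-value) for each target; higher scores are better."""
    labelled = [(s, False) for s in target_scores] + [(s, True) for s in decoy_scores]
    labelled.sort(key=lambda item: item[0], reverse=True)
    fdrs = []
    targets = decoys = 0
    for _, is_decoy in labelled:
        if is_decoy:
            decoys += 1
        else:
            targets += 1
        fdrs.append(decoys / targets if targets else 1.0)  # estimated FDR at this score threshold
    # q-value: the lowest FDR at which this identification would still be accepted
    qvalues = [0.0] * len(fdrs)
    running_min = 1.0
    for i in range(len(fdrs) - 1, -1, -1):
        running_min = min(running_min, fdrs[i])
        qvalues[i] = running_min
    return [(score, q) for (score, is_decoy), q in zip(labelled, qvalues) if not is_decoy]


result = target_decoy_qvalues([10, 9, 8, 5], [7, 4])
print(result)
```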

Additionally, the chromatogram output (.mzML) can be visualized for inspection with TOPPView.

For additional instructions on how to use PyProphet instead of mProphet, please have a look at the PyProphet Legacy Workflow: http://openswath.org/en/latest/docs/pyprophet_legacy.html.

If you want to use the SQLite-based workflow in your lab in the future, please have a look here: http://openswath.org/en/latest/docs/pyprophet.html. The SQLite-based workflow will not be part of the tutorial.

The sample dataset used in this tutorial is part of the larger SWATH MS Gold Standard (SGS) dataset, which is described in the publication of Roest et al. [21]. It contains one of 90 SWATH-MS runs with significant data reduction (peak picking of the raw, profile data) to make file transfer and working with it easier. Usually, SWATH-MS datasets are huge, with several gigabytes per run. Especially when complex samples are analyzed in combination with large assay libraries, the TOPP tool-based workflow requires a lot of computational resources. Additional information and instructions can be found at http://openswath.org/en/latest/.